Return-purchase 1.1 positioning algorithm

85

.github/ISSUE_TEMPLATE/bug-report.yml

vendored

Normal file

@@ -0,0 +1,85 @@

name: 🐛 Bug Report
# title: " "
description: Problems with YOLOv8
labels: [bug, triage]
body:
  - type: markdown
    attributes:
      value: |
        Thank you for submitting a YOLOv8 🐛 Bug Report!

  - type: checkboxes
    attributes:
      label: Search before asking
      description: >
        Please search the [issues](https://github.com/ultralytics/ultralytics/issues) to see if a similar bug report already exists.
      options:
        - label: >
            I have searched the YOLOv8 [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar bug report.
          required: true

  - type: dropdown
    attributes:
      label: YOLOv8 Component
      description: |
        Please select the part of YOLOv8 where you found the bug.
      multiple: true
      options:
        - "Training"
        - "Validation"
        - "Detection"
        - "Export"
        - "PyTorch Hub"
        - "Multi-GPU"
        - "Evolution"
        - "Integrations"
        - "Other"
    validations:
      required: false

  - type: textarea
    attributes:
      label: Bug
      description: Provide console output with error messages and/or screenshots of the bug.
      placeholder: |
        💡 ProTip! Include as much information as possible (screenshots, logs, tracebacks etc.) to receive the most helpful response.
    validations:
      required: true

  - type: textarea
    attributes:
      label: Environment
      description: Please specify the software and hardware you used to produce the bug.
      placeholder: |
        - YOLO: Ultralytics YOLOv8.0.21 🚀 Python-3.8.10 torch-1.13.1+cu117 CUDA:0 (A100-SXM-80GB, 81251MiB)
        - OS: Ubuntu 20.04
        - Python: 3.8.10
    validations:
      required: false

  - type: textarea
    attributes:
      label: Minimal Reproducible Example
      description: >
        When asking a question, people will be better able to provide help if you provide code that they can easily understand and use to **reproduce** the problem.
        This is referred to by community members as creating a [minimal reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/).
      placeholder: |
        ```
        # Code to reproduce your issue here
        ```
    validations:
      required: false

  - type: textarea
    attributes:
      label: Additional
      description: Anything else you would like to share?

  - type: checkboxes
    attributes:
      label: Are you willing to submit a PR?
      description: >
        (Optional) We encourage you to submit a [Pull Request](https://github.com/ultralytics/ultralytics/pulls) (PR) to help improve YOLOv8 for everyone, especially if you have a good understanding of how to implement a fix or feature.
        See the YOLOv8 [Contributing Guide](https://docs.ultralytics.com/help/contributing) to get started.
      options:
        - label: Yes I'd like to help by submitting a PR!

11

.github/ISSUE_TEMPLATE/config.yml

vendored

Normal file

@@ -0,0 +1,11 @@

blank_issues_enabled: true
contact_links:
  - name: 📄 Docs
    url: https://docs.ultralytics.com/
    about: Full Ultralytics YOLOv8 Documentation
  - name: 💬 Forum
    url: https://community.ultralytics.com/
    about: Ask on Ultralytics Community Forum
  - name: 🎧 Discord
    url: https://discord.gg/n6cFeSPZdD
    about: Ask on Ultralytics Discord

50

.github/ISSUE_TEMPLATE/feature-request.yml

vendored

Normal file

@@ -0,0 +1,50 @@

name: 🚀 Feature Request
description: Suggest a YOLOv8 idea
# title: " "
labels: [enhancement]
body:
  - type: markdown
    attributes:
      value: |
        Thank you for submitting a YOLOv8 🚀 Feature Request!

  - type: checkboxes
    attributes:
      label: Search before asking
      description: >
        Please search the [issues](https://github.com/ultralytics/ultralytics/issues) to see if a similar feature request already exists.
      options:
        - label: >
            I have searched the YOLOv8 [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar feature requests.
          required: true

  - type: textarea
    attributes:
      label: Description
      description: A short description of your feature.
      placeholder: |
        What new feature would you like to see in YOLOv8?
    validations:
      required: true

  - type: textarea
    attributes:
      label: Use case
      description: |
        Describe the use case of your feature request. It will help us understand and prioritize the feature request.
      placeholder: |
        How would this feature be used, and who would use it?

  - type: textarea
    attributes:
      label: Additional
      description: Anything else you would like to share?

  - type: checkboxes
    attributes:
      label: Are you willing to submit a PR?
      description: >
        (Optional) We encourage you to submit a [Pull Request](https://github.com/ultralytics/ultralytics/pulls) (PR) to help improve YOLOv8 for everyone, especially if you have a good understanding of how to implement a fix or feature.
        See the YOLOv8 [Contributing Guide](https://docs.ultralytics.com/help/contributing) to get started.
      options:
        - label: Yes I'd like to help by submitting a PR!

33

.github/ISSUE_TEMPLATE/question.yml

vendored

Normal file

@@ -0,0 +1,33 @@

name: ❓ Question
description: Ask a YOLOv8 question
# title: " "
labels: [question]
body:
  - type: markdown
    attributes:
      value: |
        Thank you for asking a YOLOv8 ❓ Question!

  - type: checkboxes
    attributes:
      label: Search before asking
      description: >
        Please search the [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/ultralytics/ultralytics/discussions) to see if a similar question already exists.
      options:
        - label: >
            I have searched the YOLOv8 [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/ultralytics/ultralytics/discussions) and found no similar questions.
          required: true

  - type: textarea
    attributes:
      label: Question
      description: What is your question?
      placeholder: |
        💡 ProTip! Include as much information as possible (screenshots, logs, tracebacks etc.) to receive the most helpful response.
    validations:
      required: true

  - type: textarea
    attributes:
      label: Additional
      description: Anything else you would like to share?

28

.github/dependabot.yml

vendored

Normal file

@@ -0,0 +1,28 @@

# To get started with Dependabot version updates, you'll need to specify which
# package ecosystems to update and where the package manifests are located.
# Please see the documentation for all configuration options:
# https://docs.github.com/github/administering-a-repository/configuration-options-for-dependency-updates

version: 2
updates:
  - package-ecosystem: pip
    directory: "/"
    schedule:
      interval: weekly
      time: "04:00"
    open-pull-requests-limit: 10
    reviewers:
      - glenn-jocher
    labels:
      - dependencies

  - package-ecosystem: github-actions
    directory: "/"
    schedule:
      interval: weekly
      time: "04:00"
    open-pull-requests-limit: 5
    reviewers:
      - glenn-jocher
    labels:
      - dependencies

26

.github/translate-readme.yml

vendored

Normal file

@@ -0,0 +1,26 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license
# README translation action to translate README.md to Chinese as README.zh-CN.md on any change to README.md

name: Translate README

on:
  push:
    branches:
      - translate_readme # replace with 'main' to enable action
    paths:
      - README.md

jobs:
  Translate:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Setup Node.js
        uses: actions/setup-node@v3
        with:
          node-version: 16
      # ISO Language Codes: https://cloud.google.com/translate/docs/languages
      - name: Adding README - Chinese Simplified
        uses: dephraiim/translate-readme@main
        with:
          LANG: zh-CN

209

.github/workflows/ci.yaml

vendored

Normal file

@@ -0,0 +1,209 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO Continuous Integration (CI) GitHub Actions tests

name: Ultralytics CI

on:
  push:
    branches: [main]
  pull_request:
    branches: [main]
  schedule:
    - cron: '0 0 * * *' # runs at 00:00 UTC every day

jobs:
  HUB:
    if: github.repository == 'ultralytics/ultralytics' && (github.event_name == 'schedule' || github.event_name == 'push')
    runs-on: ${{ matrix.os }}
    strategy:
      fail-fast: false
      matrix:
        os: [ubuntu-latest]
        python-version: ['3.10']
        model: [yolov5n]
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v4
        with:
          python-version: ${{ matrix.python-version }}
          cache: 'pip' # caching pip dependencies
      - name: Install requirements
        shell: bash # for Windows compatibility
        run: |
          python -m pip install --upgrade pip wheel
          pip install -e . --extra-index-url https://download.pytorch.org/whl/cpu
      - name: Check environment
        run: |
          echo "RUNNER_OS is ${{ runner.os }}"
          echo "GITHUB_EVENT_NAME is ${{ github.event_name }}"
          echo "GITHUB_WORKFLOW is ${{ github.workflow }}"
          echo "GITHUB_ACTOR is ${{ github.actor }}"
          echo "GITHUB_REPOSITORY is ${{ github.repository }}"
          echo "GITHUB_REPOSITORY_OWNER is ${{ github.repository_owner }}"
          python --version
          pip --version
          pip list
      - name: Test HUB training
        shell: python
        env:
          API_KEY: ${{ secrets.ULTRALYTICS_HUB_API_KEY }}
          MODEL_ID: ${{ secrets.ULTRALYTICS_HUB_MODEL_ID }}
        run: |
          import os
          from ultralytics import YOLO, hub
          api_key, model_id = os.environ['API_KEY'], os.environ['MODEL_ID']
          hub.login(api_key)
          hub.reset_model(model_id)
          model = YOLO('https://hub.ultralytics.com/models/' + model_id)
          model.train()

  Benchmarks:
    runs-on: ${{ matrix.os }}
    strategy:
      fail-fast: false
      matrix:
        os: [ubuntu-latest]
        python-version: ['3.10']
        model: [yolov8n]
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v4
        with:
          python-version: ${{ matrix.python-version }}
          cache: 'pip' # caching pip dependencies
      - name: Install requirements
        shell: bash # for Windows compatibility
        run: |
          python -m pip install --upgrade pip wheel
          if [ "${{ matrix.os }}" == "macos-latest" ]; then
              pip install -e '.[export]' --extra-index-url https://download.pytorch.org/whl/cpu
          else
              pip install -e '.[export]' --extra-index-url https://download.pytorch.org/whl/cpu
          fi
          yolo export format=tflite imgsz=32
      - name: Check environment
        run: |
          echo "RUNNER_OS is ${{ runner.os }}"
          echo "GITHUB_EVENT_NAME is ${{ github.event_name }}"
          echo "GITHUB_WORKFLOW is ${{ github.workflow }}"
          echo "GITHUB_ACTOR is ${{ github.actor }}"
          echo "GITHUB_REPOSITORY is ${{ github.repository }}"
          echo "GITHUB_REPOSITORY_OWNER is ${{ github.repository_owner }}"
          python --version
          pip --version
          pip list
      - name: Benchmark DetectionModel
        shell: python
        run: |
          from ultralytics.yolo.utils.benchmarks import benchmark
          benchmark(model='${{ matrix.model }}.pt', imgsz=160, half=False, hard_fail=0.20)
      - name: Benchmark SegmentationModel
        shell: python
        run: |
          from ultralytics.yolo.utils.benchmarks import benchmark
          benchmark(model='${{ matrix.model }}-seg.pt', imgsz=160, half=False, hard_fail=0.14)
      - name: Benchmark ClassificationModel
        shell: python
        run: |
          from ultralytics.yolo.utils.benchmarks import benchmark
          benchmark(model='${{ matrix.model }}-cls.pt', imgsz=160, half=False, hard_fail=0.61)
      - name: Benchmark PoseModel
        shell: python
        run: |
          from ultralytics.yolo.utils.benchmarks import benchmark
          benchmark(model='${{ matrix.model }}-pose.pt', imgsz=160, half=False, hard_fail=0.0)
      - name: Benchmark Summary
        run: |
          cat benchmarks.log
          echo "$(cat benchmarks.log)" >> $GITHUB_STEP_SUMMARY

  Tests:
    timeout-minutes: 60
    runs-on: ${{ matrix.os }}
    strategy:
      fail-fast: false
      matrix:
        os: [ubuntu-latest]
        python-version: ['3.7', '3.8', '3.9', '3.10']
        model: [yolov8n]
        torch: [latest]
        include:
          - os: ubuntu-latest
            python-version: '3.8' # torch 1.7.0 requires python >=3.6, <=3.8
            model: yolov8n
            torch: '1.8.0' # min torch version CI https://pypi.org/project/torchvision/
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v4
        with:
          python-version: ${{ matrix.python-version }}
          cache: 'pip' # caching pip dependencies
      - name: Install requirements
        shell: bash # for Windows compatibility
        run: |
          python -m pip install --upgrade pip wheel
          if [ "${{ matrix.torch }}" == "1.8.0" ]; then
              pip install -e . torch==1.8.0 torchvision==0.9.0 pytest --extra-index-url https://download.pytorch.org/whl/cpu
          else
              pip install -e . pytest --extra-index-url https://download.pytorch.org/whl/cpu
          fi
      - name: Check environment
        run: |
          echo "RUNNER_OS is ${{ runner.os }}"
          echo "GITHUB_EVENT_NAME is ${{ github.event_name }}"
          echo "GITHUB_WORKFLOW is ${{ github.workflow }}"
          echo "GITHUB_ACTOR is ${{ github.actor }}"
          echo "GITHUB_REPOSITORY is ${{ github.repository }}"
          echo "GITHUB_REPOSITORY_OWNER is ${{ github.repository_owner }}"
          python --version
          pip --version
          pip list
      - name: Test Detect
        shell: bash # for Windows compatibility
        run: |
          yolo detect train data=coco8.yaml model=yolov8n.yaml epochs=1 imgsz=32
          yolo detect train data=coco8.yaml model=yolov8n.pt epochs=1 imgsz=32
          yolo detect val data=coco8.yaml model=runs/detect/train/weights/last.pt imgsz=32
          yolo detect predict model=runs/detect/train/weights/last.pt imgsz=32 source=ultralytics/assets/bus.jpg
          yolo export model=runs/detect/train/weights/last.pt imgsz=32 format=torchscript
      - name: Test Segment
        shell: bash # for Windows compatibility
        run: |
          yolo segment train data=coco8-seg.yaml model=yolov8n-seg.yaml epochs=1 imgsz=32
          yolo segment train data=coco8-seg.yaml model=yolov8n-seg.pt epochs=1 imgsz=32
          yolo segment val data=coco8-seg.yaml model=runs/segment/train/weights/last.pt imgsz=32
          yolo segment predict model=runs/segment/train/weights/last.pt imgsz=32 source=ultralytics/assets/bus.jpg
          yolo export model=runs/segment/train/weights/last.pt imgsz=32 format=torchscript
      - name: Test Classify
        shell: bash # for Windows compatibility
        run: |
          yolo classify train data=imagenet10 model=yolov8n-cls.yaml epochs=1 imgsz=32
          yolo classify train data=imagenet10 model=yolov8n-cls.pt epochs=1 imgsz=32
          yolo classify val data=imagenet10 model=runs/classify/train/weights/last.pt imgsz=32
          yolo classify predict model=runs/classify/train/weights/last.pt imgsz=32 source=ultralytics/assets/bus.jpg
          yolo export model=runs/classify/train/weights/last.pt imgsz=32 format=torchscript
      - name: Test Pose
        shell: bash # for Windows compatibility
        run: |
          yolo pose train data=coco8-pose.yaml model=yolov8n-pose.yaml epochs=1 imgsz=32
          yolo pose train data=coco8-pose.yaml model=yolov8n-pose.pt epochs=1 imgsz=32
          yolo pose val data=coco8-pose.yaml model=runs/pose/train/weights/last.pt imgsz=32
          yolo pose predict model=runs/pose/train/weights/last.pt imgsz=32 source=ultralytics/assets/bus.jpg
          yolo export model=runs/pose/train/weights/last.pt imgsz=32 format=torchscript
      - name: Pytest tests
        shell: bash # for Windows compatibility
        run: pytest tests

  Summary:
    runs-on: ubuntu-latest
    needs: [HUB, Benchmarks, Tests] # Add job names that you want to check for failure
    if: always() # This ensures the job runs even if previous jobs fail
    steps:
      - name: Check for failure and notify
        if: (needs.HUB.result == 'failure' || needs.Benchmarks.result == 'failure' || needs.Tests.result == 'failure') && github.repository == 'ultralytics/ultralytics' && (github.event_name == 'schedule' || github.event_name == 'push')
        uses: slackapi/slack-github-action@v1.23.0
        with:
          payload: |
            {"text": "<!channel> GitHub Actions error for ${{ github.workflow }} ❌\n\n\n*Repository:* https://github.com/${{ github.repository }}\n*Action:* https://github.com/${{ github.repository }}/actions/runs/${{ github.run_id }}\n*Author:* ${{ github.actor }}\n*Event:* ${{ github.event_name }}\n"}
        env:
          SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL_YOLO }}
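The `Tests` job in `ci.yaml` combines a four-version Python matrix with a single `include` entry that pins `torch: '1.8.0'`. The following Python is a minimal sketch, not GitHub's implementation, of the documented `include` rule: an entry is merged into every generated combination whose original matrix values it would not overwrite, and is appended as a new combination if it fits none. Because the entry would overwrite `torch: latest` everywhere, it becomes a fifth job. `expand_matrix` is a hypothetical helper, and `exclude` plus some edge cases are deliberately ignored.

```python
from __future__ import annotations

from itertools import product


def expand_matrix(matrix: dict) -> list[dict]:
    """Hypothetical, simplified model of GitHub Actions matrix expansion.

    1. Build the cartesian product of all list-valued keys.
    2. For each `include` entry, merge it into every combination whose *original*
       values it does not overwrite; otherwise append it as a new combination.
    `exclude` is ignored in this sketch.
    """
    base = {k: v for k, v in matrix.items() if k not in ("include", "exclude")}
    keys = list(base)
    combos = [dict(zip(keys, values)) for values in product(*base.values())]
    originals = [dict(c) for c in combos]  # snapshot: only these values are protected

    for entry in matrix.get("include", []):
        merged = False
        for combo, original in zip(combos, originals):
            # Keys absent from the original matrix may always be added.
            if all(original[k] == v for k, v in entry.items() if k in original):
                combo.update(entry)
                merged = True
        if not merged:
            combos.append(dict(entry))
    return combos


# The `Tests` matrix from ci.yaml, written as Python data.
tests_matrix = {
    "os": ["ubuntu-latest"],
    "python-version": ["3.7", "3.8", "3.9", "3.10"],
    "model": ["yolov8n"],
    "torch": ["latest"],
    "include": [
        {"os": "ubuntu-latest", "python-version": "3.8", "model": "yolov8n", "torch": "1.8.0"},
    ],
}

jobs = expand_matrix(tests_matrix)
print(len(jobs))  # 5
print(jobs[-1])
```

Under this model, Python 3.8 is tested twice: once against the latest torch and once against the pinned minimum `torch==1.8.0`.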

37

.github/workflows/cla.yml

vendored

Normal file

@@ -0,0 +1,37 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license

name: CLA Assistant
on:
  issue_comment:
    types:
      - created
  pull_request_target:
    types:
      - reopened
      - opened
      - synchronize

jobs:
  CLA:
    if: github.repository == 'ultralytics/ultralytics'
    runs-on: ubuntu-latest
    steps:
      - name: CLA Assistant
        if: (github.event.comment.body == 'recheck' || github.event.comment.body == 'I have read the CLA Document and I sign the CLA') || github.event_name == 'pull_request_target'
        uses: contributor-assistant/github-action@v2.3.0
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          # must be repository secret token
          PERSONAL_ACCESS_TOKEN: ${{ secrets.PERSONAL_ACCESS_TOKEN }}
        with:
          path-to-signatures: 'signatures/version1/cla.json'
          path-to-document: 'https://docs.ultralytics.com/help/CLA' # CLA document
          # branch should not be protected
          branch: 'main'
          allowlist: dependabot[bot],github-actions,[pre-commit*,pre-commit*,bot*

          remote-organization-name: ultralytics
          remote-repository-name: cla
          custom-pr-sign-comment: 'I have read the CLA Document and I sign the CLA'
          custom-allsigned-prcomment: All Contributors have signed the CLA. ✅
          #custom-notsigned-prcomment: 'pull request comment with Introductory message to ask new contributors to sign'
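In `cla.yml`, the CLA Assistant step is gated by two `if:` conditions: a job-level repository check and a step-level check on the comment text or event type. The Python below is an illustrative sketch of that logic only; `cla_step_runs` is a hypothetical name, not part of the action. It relies on the documented behaviour that string comparisons in GitHub Actions expressions ignore case, so a comment of `RECHECK` would also trigger the step, while `please recheck` would not, because the comparison is against the whole comment body.

```python
from typing import Optional

REPOSITORY = "ultralytics/ultralytics"
SIGN_PHRASE = "I have read the CLA Document and I sign the CLA"


def _eq(a: Optional[str], b: str) -> bool:
    # Expression `==` on strings is case-insensitive, so compare case-folded values.
    # A missing value (e.g. no comment on a pull_request_target event) compares as "".
    return (a or "").casefold() == b.casefold()


def cla_step_runs(repository: str, event_name: str, comment_body: Optional[str] = None) -> bool:
    """Hypothetical mirror of the job-level and step-level `if:` conditions in cla.yml."""
    if not _eq(repository, REPOSITORY):  # job-level guard: forks never run the job
        return False
    # Step-level guard: an exact 'recheck' or sign-phrase comment...
    commented = _eq(comment_body, "recheck") or _eq(comment_body, SIGN_PHRASE)
    # ...or any pull_request_target event (opened, reopened, synchronize).
    return commented or _eq(event_name, "pull_request_target")


print(cla_step_runs("ultralytics/ultralytics", "issue_comment", "recheck"))
print(cla_step_runs("someone/fork", "pull_request_target"))
```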

41

.github/workflows/codeql.yaml

vendored

Normal file

@@ -0,0 +1,41 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license

name: "CodeQL"

on:
  schedule:
    - cron: '0 0 1 * *'

jobs:
  analyze:
    name: Analyze
    runs-on: ${{ 'ubuntu-latest' }}
    permissions:
      actions: read
      contents: read
      security-events: write

    strategy:
      fail-fast: false
      matrix:
        language: [ 'python' ]
        # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python', 'ruby' ]

    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      # Initializes the CodeQL tools for scanning.
      - name: Initialize CodeQL
        uses: github/codeql-action/init@v2
        with:
          languages: ${{ matrix.language }}
          # If you wish to specify custom queries, you can do so here or in a config file.
          # By default, queries listed here will override any specified in a config file.
          # Prefix the list here with "+" to use these queries and those in the config file.
          # queries: security-extended,security-and-quality

      - name: Perform CodeQL Analysis
        uses: github/codeql-action/analyze@v2
        with:
          category: "/language:${{matrix.language}}"
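The CodeQL workflow is scheduled with `'0 0 1 * *'` (minute 0, hour 0, day-of-month 1, i.e. 00:00 UTC on the first day of every month), whereas `ci.yaml` and `links.yml` use `'0 0 * * *'` (daily at 00:00 UTC). A simplified five-field cron matcher makes the field order concrete. This is a sketch, not GitHub's scheduler: `cron_matches` is a hypothetical helper that supports only `*` and single integers (no ranges, lists or steps), and it applies the classic cron rule that when both day fields are restricted, either one may match.

```python
from datetime import datetime


def _field_matches(field: str, value: int) -> bool:
    # Only '*' or a single integer are supported in this sketch.
    return field == "*" or int(field) == value


def cron_matches(expr: str, when: datetime) -> bool:
    """Hypothetical check of a 5-field cron expression (min hour dom month dow) against a UTC time."""
    minute, hour, dom, month, dow = expr.split()
    weekday = when.isoweekday() % 7  # cron: 0 = Sunday; isoweekday(): 1 = Mon .. 7 = Sun
    time_ok = (
        _field_matches(minute, when.minute)
        and _field_matches(hour, when.hour)
        and _field_matches(month, when.month)
    )
    dom_ok = _field_matches(dom, when.day)
    dow_ok = _field_matches(dow, weekday)
    # If both day fields are restricted, cron fires when either matches.
    day_ok = (dom_ok or dow_ok) if (dom != "*" and dow != "*") else (dom_ok and dow_ok)
    return time_ok and day_ok


print(cron_matches("0 0 1 * *", datetime(2023, 6, 1, 0, 0)))
print(cron_matches("0 0 1 * *", datetime(2023, 6, 2, 0, 0)))
```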

67

.github/workflows/docker.yaml

vendored

Normal file

@@ -0,0 +1,67 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license
# Builds ultralytics/ultralytics:latest images on DockerHub https://hub.docker.com/r/ultralytics

name: Publish Docker Images

on:
  push:
    branches: [main]

jobs:
  docker:
    if: github.repository == 'ultralytics/ultralytics'
    name: Push Docker image to Docker Hub
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repo
        uses: actions/checkout@v3

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v2

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v2

      - name: Login to Docker Hub
        uses: docker/login-action@v2
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Build and push arm64 image
        uses: docker/build-push-action@v4
        continue-on-error: true
        with:
          context: .
          platforms: linux/arm64
          file: docker/Dockerfile-arm64
          push: true
          tags: ultralytics/ultralytics:latest-arm64

      - name: Build and push Jetson image
        uses: docker/build-push-action@v4
        continue-on-error: true
        with:
          context: .
          platforms: linux/arm64
          file: docker/Dockerfile-jetson
          push: true
          tags: ultralytics/ultralytics:latest-jetson

      - name: Build and push CPU image
        uses: docker/build-push-action@v4
        continue-on-error: true
        with:
          context: .
          file: docker/Dockerfile-cpu
          push: true
          tags: ultralytics/ultralytics:latest-cpu

      - name: Build and push GPU image
        uses: docker/build-push-action@v4
        continue-on-error: true
        with:
          context: .
          file: docker/Dockerfile
          push: true
          tags: ultralytics/ultralytics:latest

56

.github/workflows/greetings.yml

vendored

Normal file

@@ -0,0 +1,56 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license

name: Greetings

on:
  pull_request_target:
    types: [opened]
  issues:
    types: [opened]

jobs:
  greeting:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/first-interaction@v1
        with:
          repo-token: ${{ secrets.GITHUB_TOKEN }}
          pr-message: |
            👋 Hello @${{ github.actor }}, thank you for submitting a YOLOv8 🚀 PR! To allow your work to be integrated as seamlessly as possible, we advise you to:

            - ✅ Verify your PR is **up-to-date** with `ultralytics/ultralytics` `main` branch. If your PR is behind you can update your code by clicking the 'Update branch' button or by running `git pull` and `git merge main` locally.
            - ✅ Verify all YOLOv8 Continuous Integration (CI) **checks are passing**.
            - ✅ Update YOLOv8 [Docs](https://docs.ultralytics.com) for any new or updated features.
            - ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee

            See our [Contributing Guide](https://docs.ultralytics.com/help/contributing) for details and let us know if you have any questions!

          issue-message: |
            👋 Hello @${{ github.actor }}, thank you for your interest in YOLOv8 🚀! We recommend a visit to the [YOLOv8 Docs](https://docs.ultralytics.com) for new users where you can find many [Python](https://docs.ultralytics.com/usage/python/) and [CLI](https://docs.ultralytics.com/usage/cli/) usage examples and where many of the most common questions may already be answered.

            If this is a 🐛 Bug Report, please provide a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/) to help us debug it.

            If this is a custom training ❓ Question, please provide as much information as possible, including dataset image examples and training logs, and verify you are following our [Tips for Best Training Results](https://docs.ultralytics.com/yolov5/tutorials/tips_for_best_training_results/).

            ## Install

            Pip install the `ultralytics` package including all [requirements](https://github.com/ultralytics/ultralytics/blob/main/requirements.txt) in a [**Python>=3.7**](https://www.python.org/) environment with [**PyTorch>=1.7**](https://pytorch.org/get-started/locally/).

            ```bash
            pip install ultralytics
            ```

            ## Environments

            YOLOv8 may be run in any of the following up-to-date verified environments (with all dependencies including [CUDA](https://developer.nvidia.com/cuda)/[CUDNN](https://developer.nvidia.com/cudnn), [Python](https://www.python.org/) and [PyTorch](https://pytorch.org/) preinstalled):

            - **Notebooks** with free GPU: <a href="https://console.paperspace.com/github/ultralytics/ultralytics"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"/></a> <a href="https://colab.research.google.com/github/ultralytics/ultralytics/blob/main/examples/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov8"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>
            - **Google Cloud** Deep Learning VM. See [GCP Quickstart Guide](https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial/)
            - **Amazon** Deep Learning AMI. See [AWS Quickstart Guide](https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial/)
            - **Docker Image**. See [Docker Quickstart Guide](https://docs.ultralytics.com/yolov5/environments/docker_image_quickstart_tutorial/) <a href="https://hub.docker.com/r/ultralytics/ultralytics"><img src="https://img.shields.io/docker/pulls/ultralytics/ultralytics?logo=docker" alt="Docker Pulls"></a>

            ## Status

            <a href="https://github.com/ultralytics/ultralytics/actions/workflows/ci.yaml?query=event%3Aschedule"><img src="https://github.com/ultralytics/ultralytics/actions/workflows/ci.yaml/badge.svg" alt="Ultralytics CI"></a>

            If this badge is green, all [Ultralytics CI](https://github.com/ultralytics/ultralytics/actions/workflows/ci.yaml?query=event%3Aschedule) tests are currently passing. CI tests verify correct operation of all YOLOv8 [Modes](https://docs.ultralytics.com/modes/) and [Tasks](https://docs.ultralytics.com/tasks/) on macOS, Windows, and Ubuntu every 24 hours and on every commit.

40

.github/workflows/links.yml

vendored

Normal file

@ -0,0 +1,40 @@

|

||||

# Ultralytics YOLO 🚀, AGPL-3.0 license

|

||||

# YOLO Continuous Integration (CI) GitHub Actions tests broken link checker

|

||||

# Accept 429(Instagram, 'too many requests'), 999(LinkedIn, 'unknown status code'), Timeout(Twitter)

|

||||

|

||||

name: Check Broken links

|

||||

|

||||

on:

|

||||

workflow_dispatch:

|

||||

schedule:

|

||||

- cron: '0 0 * * *' # runs at 00:00 UTC every day

|

||||

|

||||

jobs:

|

||||

Links:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

|

||||

- name: Download and install lychee

|

||||

run: |

|

||||

LYCHEE_URL=$(curl -s https://api.github.com/repos/lycheeverse/lychee/releases/latest | grep "browser_download_url" | grep "x86_64-unknown-linux-gnu.tar.gz" | cut -d '"' -f 4)

|

||||

curl -L $LYCHEE_URL -o lychee.tar.gz

|

||||

tar xzf lychee.tar.gz

|

||||

sudo mv lychee /usr/local/bin

|

||||

|

||||

- name: Test Markdown and HTML links with retry

|

||||

uses: nick-invision/retry@v2

|

||||

with:

|

||||

timeout_minutes: 5

|

||||

retry_wait_seconds: 60

|

||||

max_attempts: 3

|

||||

command: lychee --accept 429,999 --exclude-loopback --exclude 'https?://(www\.)?(twitter\.com|instagram\.com)' --exclude-path '**/ci.yaml' --exclude-mail --github-token ${{ secrets.GITHUB_TOKEN }} './**/*.md' './**/*.html'

|

||||

|

||||

- name: Test Markdown, HTML, YAML, Python and Notebook links with retry

|

||||

if: github.event_name == 'workflow_dispatch'

|

||||

uses: nick-invision/retry@v2

|

||||

with:

|

||||

timeout_minutes: 5

|

||||

retry_wait_seconds: 60

|

||||

max_attempts: 3

|

||||

command: lychee --accept 429,999 --exclude-loopback --exclude 'https?://(www\.)?(twitter\.com|instagram\.com|url\.com)' --exclude-path '**/ci.yaml' --exclude-mail --github-token ${{ secrets.GITHUB_TOKEN }} './**/*.md' './**/*.html' './**/*.yml' './**/*.yaml' './**/*.py' './**/*.ipynb'

|

||||
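
The `--exclude` value passed to lychee in `links.yml` is a regular expression tested against each discovered URL, which is how Twitter and Instagram links are skipped instead of being reported as broken. The sketch below reproduces that filter with Python's `re.search`, assuming it behaves like lychee's (Rust) regex matching for this simple, unanchored pattern; `is_excluded` and the sample URLs are illustrative only.

```python
import re

# Pattern copied from the first lychee step's --exclude flag
EXCLUDE = re.compile(r"https?://(www\.)?(twitter\.com|instagram\.com)")


def is_excluded(url: str) -> bool:
    """Return True if the (unanchored) exclude pattern occurs anywhere in the URL."""
    return EXCLUDE.search(url) is not None


for url in [
    "https://twitter.com/ultralytics",
    "http://www.instagram.com/ultralytics/",
    "https://docs.ultralytics.com/modes/",
]:
    print(url, "->", "skipped" if is_excluded(url) else "checked")
```

Because the search is unanchored, a URL that merely embeds a Twitter link (for example in a query string) would also be skipped.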

110

.github/workflows/publish.yml

vendored

Normal file

110

.github/workflows/publish.yml

vendored

Normal file

@ -0,0 +1,110 @@

|

||||

# Ultralytics YOLO 🚀, AGPL-3.0 license

|

||||

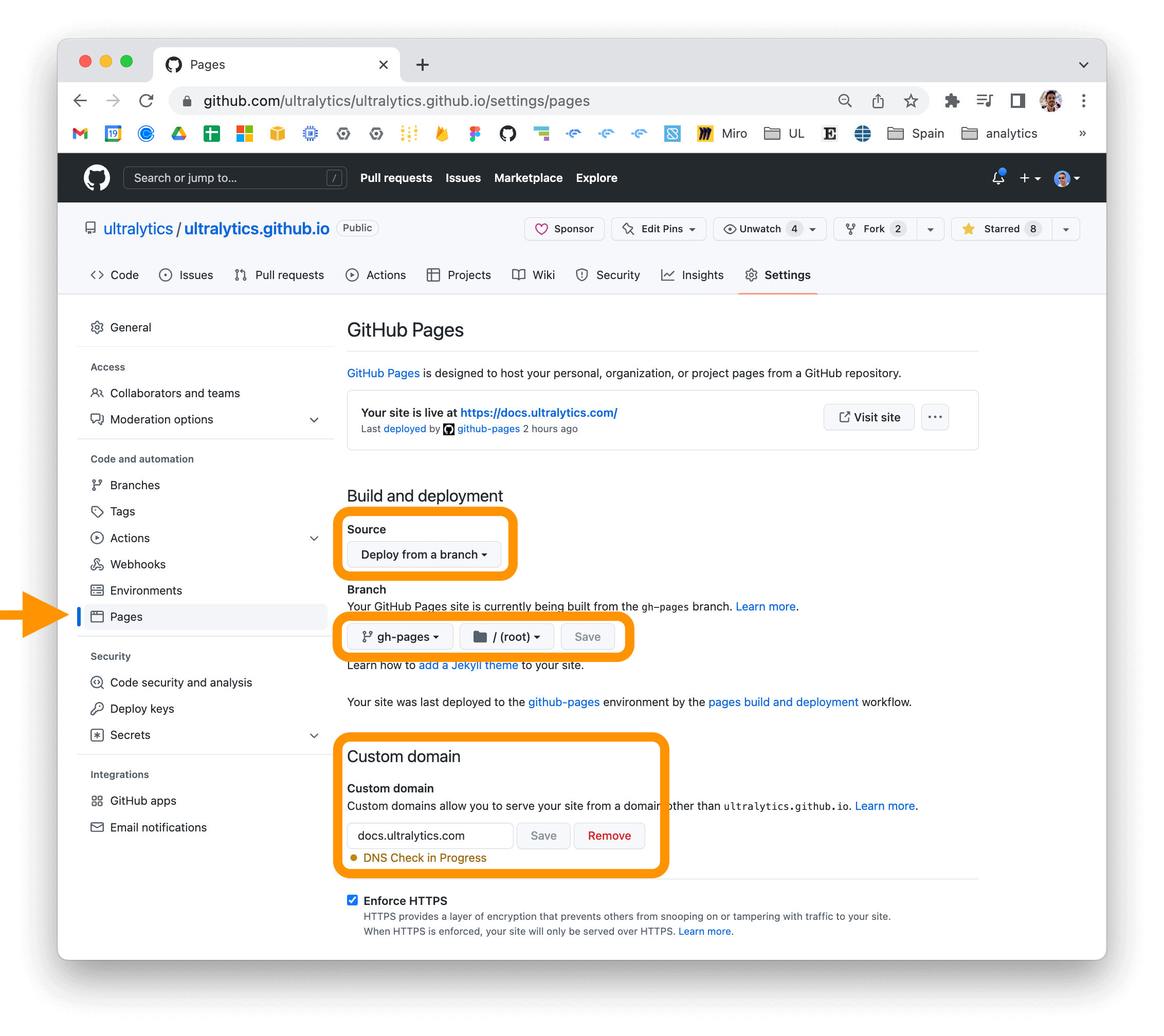

# Publish pip package to PyPI https://pypi.org/project/ultralytics/ and Docs to https://docs.ultralytics.com

|

||||

|

||||

name: Publish to PyPI and Deploy Docs

|

||||

|

||||

on:

|

||||

push:

|

||||

branches: [main]

|

||||

workflow_dispatch:

|

||||

inputs:

|

||||

pypi:

|

||||

type: boolean

|

||||

description: Publish to PyPI

|

||||

docs:

|

||||

type: boolean

|

||||

description: Deploy Docs

|

||||

|

||||

jobs:

|

||||

publish:

|

||||

if: github.repository == 'ultralytics/ultralytics' && github.actor == 'glenn-jocher'

|

||||

name: Publish

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: Checkout code

|

||||

uses: actions/checkout@v3

|

||||

with:

|

||||

fetch-depth: "0" # pulls all commits (needed correct last updated dates in Docs)

|

||||

- name: Set up Python environment

|

||||

uses: actions/setup-python@v4

|

||||

with:

|

||||

python-version: '3.10'

|

||||

cache: 'pip' # caching pip dependencies

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip wheel build twine

|

||||

pip install -e '.[dev]' --extra-index-url https://download.pytorch.org/whl/cpu

|

||||

- name: Check PyPI version

|

||||

shell: python

|

||||

run: |

|

||||

import os

|

||||

import pkg_resources as pkg

|

||||

import ultralytics

|

||||

from ultralytics.yolo.utils.checks import check_latest_pypi_version

|

||||

|

||||

v_local = pkg.parse_version(ultralytics.__version__).release

|

||||

v_pypi = pkg.parse_version(check_latest_pypi_version()).release

|

||||

print(f'Local version is {v_local}')

|

||||

print(f'PyPI version is {v_pypi}')

|

||||

d = [a - b for a, b in zip(v_local, v_pypi)] # diff

|

||||

increment = (d[0] == d[1] == 0) and d[2] == 1 # only publish if patch version increments by 1

|

||||

os.system(f'echo "increment={increment}" >> $GITHUB_OUTPUT')

|

||||

os.system(f'echo "version={ultralytics.__version__}" >> $GITHUB_OUTPUT')

|

||||

if increment:

|

||||

print('Local version is higher than PyPI version. Publishing new version to PyPI ✅.')

|

||||

id: check_pypi

|

||||

- name: Publish to PyPI

|

||||

continue-on-error: true

|

||||

if: (github.event_name == 'push' || github.event.inputs.pypi == 'true') && steps.check_pypi.outputs.increment == 'True'

|

||||

env:

|

||||

PYPI_TOKEN: ${{ secrets.PYPI_TOKEN }}

|

||||

run: |

|

||||

python -m build

|

||||

python -m twine upload dist/* -u __token__ -p $PYPI_TOKEN

|

||||

- name: Deploy Docs

|

||||

continue-on-error: true

|

||||

if: (github.event_name == 'push' && steps.check_pypi.outputs.increment == 'True') || github.event.inputs.docs == 'true'

|

||||

env:

|

||||

PERSONAL_ACCESS_TOKEN: ${{ secrets.PERSONAL_ACCESS_TOKEN }}

|

||||

run: |

|

||||

mkdocs build

|

||||

git config --global user.name "Glenn Jocher"

|

||||

git config --global user.email "glenn.jocher@ultralytics.com"

|

||||

git clone https://github.com/ultralytics/docs.git docs-repo

|

||||

cd docs-repo

|

||||

git checkout gh-pages || git checkout -b gh-pages

|

||||

rm -rf *

|

||||

cp -R ../site/* .

|

||||

git add .

|

||||

git commit -m "Update Docs for 'ultralytics ${{ steps.check_pypi.outputs.version }}'"

|

||||

git push https://${{ secrets.PERSONAL_ACCESS_TOKEN }}@github.com/ultralytics/docs.git gh-pages

|

||||

- name: Extract PR Details

|

||||

run: |

|

||||

if [ "${{ github.event_name }}" = "pull_request" ]; then

|

||||

PR_JSON=$(curl -s -H "Authorization: token ${{ secrets.GITHUB_TOKEN }}" https://api.github.com/repos/${{ github.repository }}/pulls/${{ github.event.pull_request.number }})

|

||||

PR_NUMBER=${{ github.event.pull_request.number }}

|

||||

PR_TITLE=$(echo $PR_JSON | jq -r '.title')

|

||||

else

|

||||

COMMIT_SHA=${{ github.event.after }}

|

||||

PR_JSON=$(curl -s -H "Authorization: token ${{ secrets.GITHUB_TOKEN }}" "https://api.github.com/search/issues?q=repo:${{ github.repository }}+is:pr+is:merged+sha:$COMMIT_SHA")

|

||||

PR_NUMBER=$(echo $PR_JSON | jq -r '.items[0].number')

|

||||

PR_TITLE=$(echo $PR_JSON | jq -r '.items[0].title')

|

||||

fi

|

||||

echo "PR_NUMBER=$PR_NUMBER" >> $GITHUB_ENV

|

||||

echo "PR_TITLE=$PR_TITLE" >> $GITHUB_ENV

|

||||

- name: Notify on Slack (Success)

|

||||

if: success() && github.event_name == 'push' && steps.check_pypi.outputs.increment == 'True'

|

||||

uses: slackapi/slack-github-action@v1.23.0

|

||||

with:

|

||||

payload: |

|

||||

{"text": "<!channel> GitHub Actions success for ${{ github.workflow }} ✅\n\n\n*Repository:* https://github.com/${{ github.repository }}\n*Action:* https://github.com/${{ github.repository }}/actions/runs/${{ github.run_id }}\n*Author:* ${{ github.actor }}\n*Event:* NEW 'ultralytics ${{ steps.check_pypi.outputs.version }}' pip package published 😃\n*Job Status:* ${{ job.status }}\n*Pull Request:* <https://github.com/${{ github.repository }}/pull/${{ env.PR_NUMBER }}> ${{ env.PR_TITLE }}\n"}

|

||||

env:

|

||||

SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL_YOLO }}

|

||||

- name: Notify on Slack (Failure)

|

||||

if: failure()

|

||||

uses: slackapi/slack-github-action@v1.23.0

|

||||

with:

|

||||

payload: |

|

||||

{"text": "<!channel> GitHub Actions error for ${{ github.workflow }} ❌\n\n\n*Repository:* https://github.com/${{ github.repository }}\n*Action:* https://github.com/${{ github.repository }}/actions/runs/${{ github.run_id }}\n*Author:* ${{ github.actor }}\n*Event:* ${{ github.event_name }}\n*Job Status:* ${{ job.status }}\n*Pull Request:* <https://github.com/${{ github.repository }}/pull/${{ env.PR_NUMBER }}> ${{ env.PR_TITLE }}\n"}

|

||||

env:

|

||||

SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL_YOLO }}

|

||||
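
The `Check PyPI version` step in `publish.yml` only allows a release when the major and minor numbers match PyPI and the patch number is exactly one higher. Below is a minimal stdlib-only sketch of that rule. It assumes plain `X.Y.Z` version strings (the workflow itself uses `pkg_resources.parse_version`, which also copes with pre-release tags), and `parse_release`, `should_publish` and `write_output` are hypothetical helper names. Instead of shelling out with `os.system('echo ... >> $GITHUB_OUTPUT')`, it appends directly to the file named by the `GITHUB_OUTPUT` environment variable, which is the file GitHub Actions reads step outputs from.

```python
import os


def parse_release(version: str) -> tuple:
    """Turn a plain 'X.Y.Z' string into a tuple of ints (no pre-release handling)."""
    return tuple(int(part) for part in version.split("."))


def should_publish(local: str, pypi: str) -> bool:
    """Same major and minor as PyPI, patch bumped by exactly 1."""
    d = [a - b for a, b in zip(parse_release(local), parse_release(pypi))]
    return d[0] == d[1] == 0 and d[2] == 1


def write_output(name: str, value) -> None:
    """Append name=value to the step-output file when running inside GitHub Actions."""
    path = os.environ.get("GITHUB_OUTPUT")
    if path:
        with open(path, "a", encoding="utf-8") as f:
            f.write(f"{name}={value}\n")


increment = should_publish("8.0.121", "8.0.120")
write_output("increment", increment)  # str(True) == 'True', matching the later `== 'True'` checks
print(increment)  # True
```

Writing the boolean through `str()` is what makes the later `steps.check_pypi.outputs.increment == 'True'` conditions work, since step outputs are always strings.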

47

.github/workflows/stale.yml

vendored

Normal file

@ -0,0 +1,47 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license

name: Close stale issues
on:
  schedule:
    - cron: '0 0 * * *' # Runs at 00:00 UTC every day

jobs:
  stale:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/stale@v8
        with:
          repo-token: ${{ secrets.GITHUB_TOKEN }}

          stale-issue-message: |
            👋 Hello there! We wanted to give you a friendly reminder that this issue has not had any recent activity and may be closed soon, but don't worry - you can always reopen it if needed. If you still have any questions or concerns, please feel free to let us know how we can help.

            For additional resources and information, please see the links below:

            - **Docs**: https://docs.ultralytics.com
            - **HUB**: https://hub.ultralytics.com
            - **Community**: https://community.ultralytics.com

            Feel free to inform us of any other **issues** you discover or **feature requests** that come to mind in the future. Pull Requests (PRs) are also always welcomed!

            Thank you for your contributions to YOLO 🚀 and Vision AI ⭐

          stale-pr-message: |
            👋 Hello there! We wanted to let you know that we've decided to close this pull request due to inactivity. We appreciate the effort you put into contributing to our project, but unfortunately, not all contributions are suitable or aligned with our product roadmap.

            We hope you understand our decision, and please don't let it discourage you from contributing to open source projects in the future. We value all of our community members and their contributions, and we encourage you to keep exploring new projects and ways to get involved.

            For additional resources and information, please see the links below:

            - **Docs**: https://docs.ultralytics.com
            - **HUB**: https://hub.ultralytics.com
            - **Community**: https://community.ultralytics.com

            Thank you for your contributions to YOLO 🚀 and Vision AI ⭐

          days-before-issue-stale: 30
          days-before-issue-close: 10
          days-before-pr-stale: 90
          days-before-pr-close: 30
          exempt-issue-labels: 'documentation,tutorial,TODO'
          operations-per-run: 300 # The maximum number of operations per run, used to control rate limiting.
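
With the `stale.yml` settings, an inactive issue is labeled stale after 30 days and closed 10 days later, while a pull request gets 90 days and then 30 more. The date arithmetic is sketched below, assuming (as the `actions/stale` README describes) that the close countdown starts when the stale label is applied, that nothing happens on the item in between, and ignoring the once-a-day cron granularity and exempt labels. `close_date` is a hypothetical helper, not part of the action.

```python
from datetime import date, timedelta

# Values copied from the stale.yml inputs
SETTINGS = {
    "issue": {"stale": 30, "close": 10},  # days-before-issue-stale / -close
    "pr": {"stale": 90, "close": 30},  # days-before-pr-stale / -close
}


def close_date(kind: str, last_activity: date) -> tuple:
    """Return (date marked stale, date closed) for an item with no further activity."""
    cfg = SETTINGS[kind]
    stale_on = last_activity + timedelta(days=cfg["stale"])
    # the close countdown runs from the moment the item is marked stale
    closed_on = stale_on + timedelta(days=cfg["close"])
    return stale_on, closed_on


stale_on, closed_on = close_date("issue", date(2023, 6, 1))
print(f"issue: stale {stale_on}, closed {closed_on}")  # issue: stale 2023-07-01, closed 2023-07-11
```

In total an untouched issue survives about 40 days and an untouched pull request about 120 days.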

156

.gitignore

vendored

Normal file

@ -0,0 +1,156 @@

# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
pip-wheel-metadata/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# Profiling
*.pclprof

# pyenv
.python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# PEP 582; used by e.g. github.com/David-OConnor/pyflow
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
.idea
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# datasets and projects
datasets/
runs/
wandb/

.DS_Store

# Neural Network weights -----------------------------------------------------------------------------------------------
weights/
*.weights
*.pt
*.pb
*.onnx
*.engine
*.mlmodel
*.torchscript
*.tflite
*.h5
*_saved_model/
*_web_model/
*_openvino_model/
*_paddle_model/
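
The "Neural Network weights" block of the `.gitignore` keeps trained and exported models out of the repository by file extension or by directory-name suffix. The sketch below approximates that matching with Python's `fnmatch`. It is only an approximation of gitignore semantics: it ignores negation and anchored patterns such as `/site`, and it treats a trailing slash simply as "match a parent directory". `is_ignored` and the sample paths are illustrative.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath

# The weight patterns from the .gitignore above
WEIGHT_PATTERNS = [
    "weights/", "*.weights", "*.pt", "*.pb", "*.onnx", "*.engine", "*.mlmodel",
    "*.torchscript", "*.tflite", "*.h5",
    "*_saved_model/", "*_web_model/", "*_openvino_model/", "*_paddle_model/",
]


def is_ignored(path: str) -> bool:
    """Approximate check: does the file name or any parent directory match a pattern?"""
    parts = PurePosixPath(path).parts
    for pattern in WEIGHT_PATTERNS:
        name_pattern = pattern.rstrip("/")
        if pattern.endswith("/"):
            # directory pattern: compare against every parent directory name
            if any(fnmatch(part, name_pattern) for part in parts[:-1]):
                return True
        elif fnmatch(parts[-1], name_pattern):
            return True
    return False


print(is_ignored("runs/train/weights/best.pt"))  # True
print(is_ignored("ultralytics/models/v8/yolov8.yaml"))  # False
```

For authoritative answers inside a real checkout, `git check-ignore -v <path>` reports which rule matched.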

73

.pre-commit-config.yaml

Normal file

@ -0,0 +1,73 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license
# Pre-commit hooks. For more information see https://github.com/pre-commit/pre-commit-hooks/blob/main/README.md

exclude: 'docs/'
# Define bot property if installed via https://github.com/marketplace/pre-commit-ci
ci:
  autofix_prs: true
  autoupdate_commit_msg: '[pre-commit.ci] pre-commit suggestions'
  autoupdate_schedule: monthly
  # submodules: true

repos:
  - repo: https://github.com/pre-commit/pre-commit-hooks
    rev: v4.4.0
    hooks:
      - id: end-of-file-fixer
      - id: trailing-whitespace
      - id: check-case-conflict
      # - id: check-yaml
      - id: check-docstring-first
      - id: double-quote-string-fixer
      - id: detect-private-key

  - repo: https://github.com/asottile/pyupgrade
    rev: v3.3.2
    hooks:
      - id: pyupgrade
        name: Upgrade code

  - repo: https://github.com/PyCQA/isort
    rev: 5.12.0
    hooks:
      - id: isort
        name: Sort imports

  - repo: https://github.com/google/yapf
    rev: v0.33.0
    hooks:
      - id: yapf
        name: YAPF formatting

  - repo: https://github.com/executablebooks/mdformat
    rev: 0.7.16
    hooks:
      - id: mdformat
        name: MD formatting
        additional_dependencies:
          - mdformat-gfm
          - mdformat-black
        # exclude: "README.md|README.zh-CN.md|CONTRIBUTING.md"

  - repo: https://github.com/PyCQA/flake8
    rev: 6.0.0
    hooks:
      - id: flake8
        name: PEP8

  - repo: https://github.com/codespell-project/codespell
    rev: v2.2.4
    hooks:
      - id: codespell
        args:
          - --ignore-words-list=crate,nd,strack,dota

  # - repo: https://github.com/asottile/yesqa
  # rev: v1.4.0
  # hooks:
  # - id: yesqa

  # - repo: https://github.com/asottile/dead
  # rev: v1.5.0
  # hooks:
  # - id: dead
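
The first two hooks in `.pre-commit-config.yaml` normalize whitespace: according to the pre-commit-hooks README, `end-of-file-fixer` leaves a file either empty or ending in exactly one newline, and `trailing-whitespace` trims whitespace at the end of lines. The sketch below approximates both on an in-memory string so their combined effect is easy to see. It is not the hooks' actual source, `trim_trailing_whitespace` and `fix_end_of_file` are hypothetical names, and edge cases (CRLF handling, the Markdown line-break option) may differ from the real hooks.

```python
def trim_trailing_whitespace(text: str) -> str:
    """Strip spaces and tabs at the end of every line, keeping each line's original ending."""
    out = []
    for line in text.splitlines(keepends=True):
        body = line.rstrip("\r\n")
        ending = line[len(body):]  # newline sequence, or '' for a final unterminated line
        out.append(body.rstrip(" \t") + ending)
    return "".join(out)


def fix_end_of_file(text: str) -> str:
    """Return '' for a file with no content, otherwise the text ending in exactly one newline."""
    stripped = text.rstrip("\r\n")
    return stripped + "\n" if stripped else ""


source = "import os  \nprint(os.sep)\t\n\n\n"
print(repr(fix_end_of_file(trim_trailing_whitespace(source))))  # 'import os\nprint(os.sep)\n'
```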

BIN

100_1688009697927.mp4

Normal file


Binary file not shown.

20

CITATION.cff

Normal file

@ -0,0 +1,20 @@

cff-version: 1.2.0
preferred-citation:
  type: software
  message: If you use this software, please cite it as below.
  authors:
    - family-names: Jocher
      given-names: Glenn
      orcid: "https://orcid.org/0000-0001-5950-6979"
    - family-names: Chaurasia
      given-names: Ayush
      orcid: "https://orcid.org/0000-0002-7603-6750"
    - family-names: Qiu
      given-names: Jing
      orcid: "https://orcid.org/0000-0003-3783-7069"
  title: "YOLO by Ultralytics"
  version: 8.0.0
  # doi: 10.5281/zenodo.3908559 # TODO
  date-released: 2023-1-10
  license: AGPL-3.0
  url: "https://github.com/ultralytics/ultralytics"

115

CONTRIBUTING.md

Normal file

@ -0,0 +1,115 @@

## Contributing to YOLOv8 🚀

We love your input! We want to make contributing to YOLOv8 as easy and transparent as possible, whether it's:

- Reporting a bug
- Discussing the current state of the code
- Submitting a fix
- Proposing a new feature
- Becoming a maintainer

YOLOv8 works so well due to our combined community effort, and for every small improvement you contribute you will be
helping push the frontiers of what's possible in AI 😃!

## Submitting a Pull Request (PR) 🛠️

Submitting a PR is easy! This example shows how to submit a PR for updating `requirements.txt` in 4 steps:

### 1. Select File to Update

Select `requirements.txt` to update by clicking on it in GitHub.

<p align="center"><img width="800" alt="PR_step1" src="https://user-images.githubusercontent.com/26833433/122260847-08be2600-ced4-11eb-828b-8287ace4136c.png"></p>

### 2. Click 'Edit this file'

The button is in the top-right corner.

<p align="center"><img width="800" alt="PR_step2" src="https://user-images.githubusercontent.com/26833433/122260844-06f46280-ced4-11eb-9eec-b8a24be519ca.png"></p>

### 3. Make Changes

Change the `matplotlib` version from `3.2.2` to `3.3`.

<p align="center"><img width="800" alt="PR_step3" src="https://user-images.githubusercontent.com/26833433/122260853-0a87e980-ced4-11eb-9fd2-3650fb6e0842.png"></p>

### 4. Preview Changes and Submit PR

Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch**
for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose
changes** button. All done, your PR is now submitted to YOLOv8 for review and approval 😃!

<p align="center"><img width="800" alt="PR_step4" src="https://user-images.githubusercontent.com/26833433/122260856-0b208000-ced4-11eb-8e8e-77b6151cbcc3.png"></p>

### PR recommendations

To allow your work to be integrated as seamlessly as possible, we advise you to:

- ✅ Verify your PR is **up-to-date** with the `ultralytics/ultralytics` `main` branch. If your PR is behind, you can update
  your code by clicking the 'Update branch' button or by running `git pull` and `git merge main` locally.

<p align="center"><img width="751" alt="Screenshot 2022-08-29 at 22 47 15" src="https://user-images.githubusercontent.com/26833433/187295893-50ed9f44-b2c9-4138-a614-de69bd1753d7.png"></p>

- ✅ Verify all YOLOv8 Continuous Integration (CI) **checks are passing**.

<p align="center"><img width="751" alt="Screenshot 2022-08-29 at 22 47 03" src="https://user-images.githubusercontent.com/26833433/187296922-545c5498-f64a-4d8c-8300-5fa764360da6.png"></p>

- ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase
  but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee

### Docstrings

Not all functions or classes require docstrings, but when they do, we
follow the [google-style docstrings format](https://google.github.io/styleguide/pyguide.html#38-comments-and-docstrings).
Here is an example:

```python
"""
    What the function does. Performs NMS on given detection predictions.

    Args:
        arg1: The description of the 1st argument
        arg2: The description of the 2nd argument

    Returns:
        What the function returns. Empty if nothing is returned.

    Raises:
        Exception Class: When and why this exception can be raised by the function.
"""
```
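
To make the docstring template concrete, here is a small helper documented in the same Google style. `box_iou` is a hypothetical function written only to illustrate the `Args`, `Returns` and `Raises` sections; it is not part of the YOLOv8 codebase. It uses single-quoted strings, which is what the repository's `double-quote-string-fixer` pre-commit hook enforces.

```python
def box_iou(box1: tuple, box2: tuple) -> float:
    """
    Computes the intersection over union (IoU) of two axis-aligned boxes.

    Args:
        box1 (tuple): First box as (x1, y1, x2, y2) with x1 <= x2 and y1 <= y2.
        box2 (tuple): Second box in the same format.

    Returns:
        (float): IoU in the range [0, 1], or 0.0 if both boxes have zero area.

    Raises:
        ValueError: If either box has a negative width or height.
    """
    for x1, y1, x2, y2 in (box1, box2):
        if x2 < x1 or y2 < y1:
            raise ValueError(f'invalid box: {(x1, y1, x2, y2)}')
    # overlap along each axis, clamped at zero for disjoint boxes
    iw = max(0.0, min(box1[2], box2[2]) - max(box1[0], box2[0]))
    ih = max(0.0, min(box1[3], box2[3]) - max(box1[1], box2[1]))
    inter = iw * ih
    area1 = (box1[2] - box1[0]) * (box1[3] - box1[1])
    area2 = (box2[2] - box2[0]) * (box2[3] - box2[1])
    union = area1 + area2 - inter
    return inter / union if union > 0 else 0.0
```

For example, `box_iou((0, 0, 2, 2), (1, 1, 3, 3))` overlaps in a 1×1 square out of a union of 7, giving 1/7.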

## Submitting a Bug Report 🐛

If you spot a problem with YOLOv8, please submit a Bug Report!

For us to start investigating a possible problem we need to be able to reproduce it ourselves first. We've created a few
short guidelines below to help users provide what we need in order to get started.

When asking a question, people will be better able to provide help if you provide **code** that they can easily
understand and use to **reproduce** the problem. This is referred to by community members as creating
a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/). Your code that reproduces
the problem should be:

- ✅ **Minimal** – Use as little code as possible that still produces the same problem
- ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself
- ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem

In addition to the above requirements, for [Ultralytics](https://ultralytics.com/) to provide assistance your code
should be:

- ✅ **Current** – Verify that your code is up-to-date with the current
  GitHub [main](https://github.com/ultralytics/ultralytics/tree/main) branch, and if necessary `git pull` or `git clone`
  a new copy to ensure your problem has not already been resolved by previous commits.
- ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this
  repository. [Ultralytics](https://ultralytics.com/) does not provide support for custom code ⚠️.

If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛
**Bug Report** [template](https://github.com/ultralytics/ultralytics/issues/new/choose), providing
a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/) to help us better
understand and diagnose your problem.

## License

By contributing, you agree that your contributions will be licensed under
the [AGPL-3.0 license](https://choosealicense.com/licenses/agpl-3.0/).

661

LICENSE

Normal file

@ -0,0 +1,661 @@

GNU AFFERO GENERAL PUBLIC LICENSE
Version 3, 19 November 2007

Copyright (C) 2007 Free Software Foundation, Inc. <https://fsf.org/>
Everyone is permitted to copy and distribute verbatim copies
of this license document, but changing it is not allowed.

Preamble

The GNU Affero General Public License is a free, copyleft license for
software and other kinds of works, specifically designed to ensure
cooperation with the community in the case of network server software.

The licenses for most software and other practical works are designed
to take away your freedom to share and change the works. By contrast,
our General Public Licenses are intended to guarantee your freedom to
share and change all versions of a program--to make sure it remains free
software for all its users.

When we speak of free software, we are referring to freedom, not
price. Our General Public Licenses are designed to make sure that you
have the freedom to distribute copies of free software (and charge for
them if you wish), that you receive source code or can get it if you
want it, that you can change the software or use pieces of it in new
free programs, and that you know you can do these things.

Developers that use our General Public Licenses protect your rights
with two steps: (1) assert copyright on the software, and (2) offer
you this License which gives you legal permission to copy, distribute
and/or modify the software.

A secondary benefit of defending all users' freedom is that
improvements made in alternate versions of the program, if they
receive widespread use, become available for other developers to
incorporate. Many developers of free software are heartened and
encouraged by the resulting cooperation. However, in the case of
software used on network servers, this result may fail to come about.
The GNU General Public License permits making a modified version and
letting the public access it on a server without ever releasing its
source code to the public.

The GNU Affero General Public License is designed specifically to
ensure that, in such cases, the modified source code becomes available
to the community. It requires the operator of a network server to
provide the source code of the modified version running there to the
users of that server. Therefore, public use of a modified version, on
a publicly accessible server, gives the public access to the source
code of the modified version.

An older license, called the Affero General Public License and
published by Affero, was designed to accomplish similar goals. This is
a different license, not a version of the Affero GPL, but Affero has
released a new version of the Affero GPL which permits relicensing under
this license.

The precise terms and conditions for copying, distribution and
modification follow.