退购1.1定位算法

This commit is contained in:

7

docs/datasets/classify/caltech101.md

Normal file

7

docs/datasets/classify/caltech101.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/classify/caltech256.md

Normal file

7

docs/datasets/classify/caltech256.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/classify/cifar10.md

Normal file

7

docs/datasets/classify/cifar10.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/classify/cifar100.md

Normal file

7

docs/datasets/classify/cifar100.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/classify/fashion-mnist.md

Normal file

7

docs/datasets/classify/fashion-mnist.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/classify/imagenet.md

Normal file

7

docs/datasets/classify/imagenet.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/classify/imagenet10.md

Normal file

7

docs/datasets/classify/imagenet10.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/classify/imagenette.md

Normal file

7

docs/datasets/classify/imagenette.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/classify/imagewoof.md

Normal file

7

docs/datasets/classify/imagewoof.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

104

docs/datasets/classify/index.md

Normal file

104

docs/datasets/classify/index.md

Normal file

@ -0,0 +1,104 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn how torchvision organizes classification image datasets. Use this code to create and train models. CLI and Python code shown.

|

||||

---

|

||||

|

||||

# Image Classification Datasets Overview

|

||||

|

||||

## Dataset format

|

||||

|

||||

The folder structure for classification datasets in torchvision typically follows a standard format:

|

||||

|

||||

```

|

||||

root/

|

||||

|-- class1/

|

||||

| |-- img1.jpg

|

||||

| |-- img2.jpg

|

||||

| |-- ...

|

||||

|

|

||||

|-- class2/

|

||||

| |-- img1.jpg

|

||||

| |-- img2.jpg

|

||||

| |-- ...

|

||||

|

|

||||

|-- class3/

|

||||

| |-- img1.jpg

|

||||

| |-- img2.jpg

|

||||

| |-- ...

|

||||

|

|

||||

|-- ...

|

||||

```

|

||||

|

||||

In this folder structure, the `root` directory contains one subdirectory for each class in the dataset. Each subdirectory is named after the corresponding class and contains all the images for that class. Each image file is named uniquely and is typically in a common image file format such as JPEG or PNG.

|

||||

|

||||

** Example **

|

||||

|

||||

For example, in the CIFAR10 dataset, the folder structure would look like this:

|

||||

|

||||

```

|

||||

cifar-10-/

|

||||

|

|

||||

|-- train/

|

||||

| |-- airplane/

|

||||

| | |-- 10008_airplane.png

|

||||

| | |-- 10009_airplane.png

|

||||

| | |-- ...

|

||||

| |

|

||||

| |-- automobile/

|

||||

| | |-- 1000_automobile.png

|

||||

| | |-- 1001_automobile.png

|

||||

| | |-- ...

|

||||

| |

|

||||

| |-- bird/

|

||||

| | |-- 10014_bird.png

|

||||

| | |-- 10015_bird.png

|

||||

| | |-- ...

|

||||

| |

|

||||

| |-- ...

|

||||

|

|

||||

|-- test/

|

||||

| |-- airplane/

|

||||

| | |-- 10_airplane.png

|

||||

| | |-- 11_airplane.png

|

||||

| | |-- ...

|

||||

| |

|

||||

| |-- automobile/

|

||||

| | |-- 100_automobile.png

|

||||

| | |-- 101_automobile.png

|

||||

| | |-- ...

|

||||

| |

|

||||

| |-- bird/

|

||||

| | |-- 1000_bird.png

|

||||

| | |-- 1001_bird.png

|

||||

| | |-- ...

|

||||

| |

|

||||

| |-- ...

|

||||

```

|

||||

|

||||

In this example, the `train` directory contains subdirectories for each class in the dataset, and each class subdirectory contains all the images for that class. The `test` directory has a similar structure. The `root` directory also contains other files that are part of the CIFAR10 dataset.

|

||||

|

||||

## Usage

|

||||

|

||||

!!! example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='path/to/dataset', epochs=100, imgsz=640)

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=path/to/data model=yolov8n-seg.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Supported Datasets

|

||||

|

||||

TODO

|

||||

7

docs/datasets/classify/mnist.md

Normal file

7

docs/datasets/classify/mnist.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

88

docs/datasets/detect/argoverse.md

Normal file

88

docs/datasets/detect/argoverse.md

Normal file

@ -0,0 +1,88 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the Argoverse dataset, a rich dataset designed to support research in autonomous driving tasks such as 3D tracking, motion forecasting, and stereo depth estimation.

|

||||

---

|

||||

|

||||

# Argoverse Dataset

|

||||

|

||||

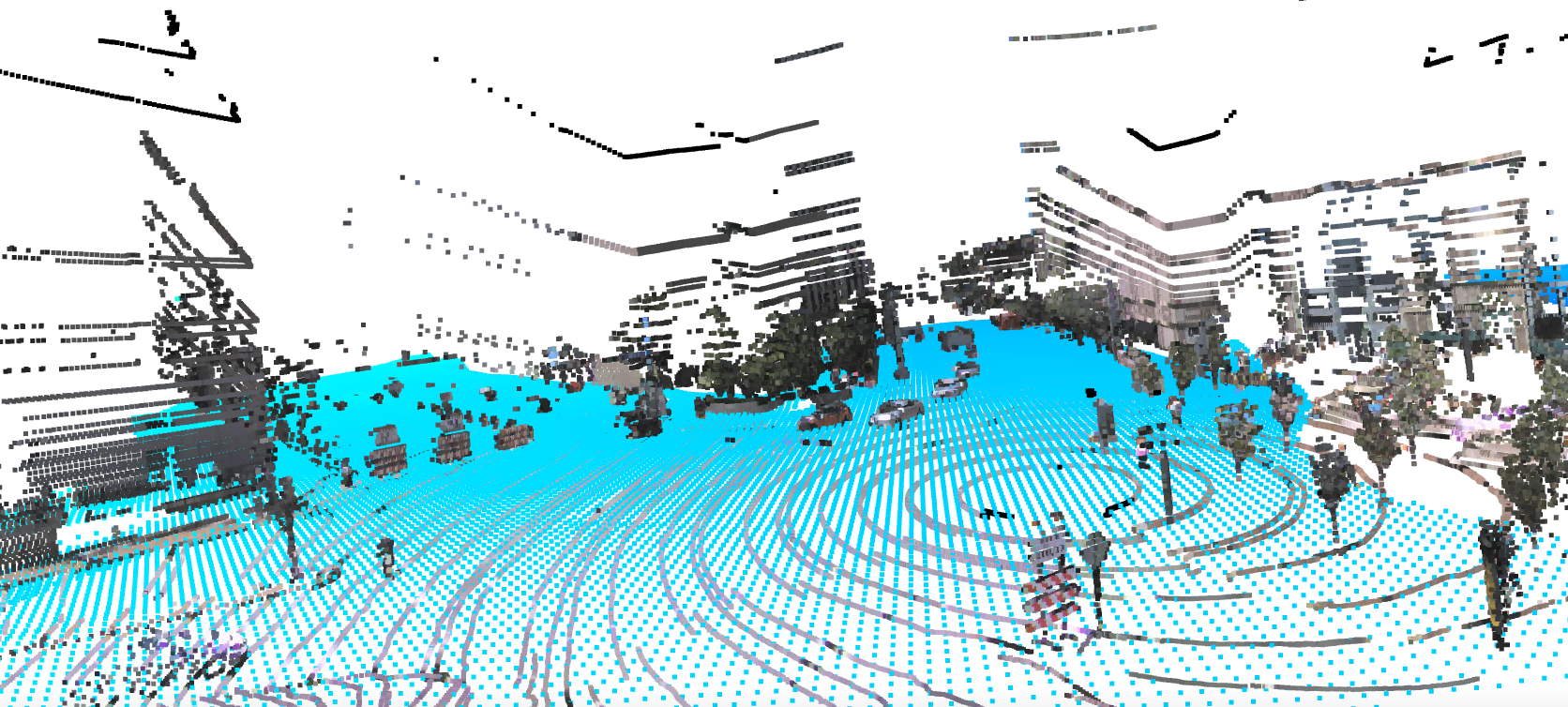

The [Argoverse](https://www.argoverse.org/) dataset is a collection of data designed to support research in autonomous driving tasks, such as 3D tracking, motion forecasting, and stereo depth estimation. Developed by Argo AI, the dataset provides a wide range of high-quality sensor data, including high-resolution images, LiDAR point clouds, and map data.

|

||||

|

||||

## Key Features

|

||||

|

||||

- Argoverse contains over 290K labeled 3D object tracks and 5 million object instances across 1,263 distinct scenes.

|

||||

- The dataset includes high-resolution camera images, LiDAR point clouds, and richly annotated HD maps.

|

||||

- Annotations include 3D bounding boxes for objects, object tracks, and trajectory information.

|

||||

- Argoverse provides multiple subsets for different tasks, such as 3D tracking, motion forecasting, and stereo depth estimation.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The Argoverse dataset is organized into three main subsets:

|

||||

|

||||

1. **Argoverse 3D Tracking**: This subset contains 113 scenes with over 290K labeled 3D object tracks, focusing on 3D object tracking tasks. It includes LiDAR point clouds, camera images, and sensor calibration information.

|

||||

2. **Argoverse Motion Forecasting**: This subset consists of 324K vehicle trajectories collected from 60 hours of driving data, suitable for motion forecasting tasks.

|

||||

3. **Argoverse Stereo Depth Estimation**: This subset is designed for stereo depth estimation tasks and includes over 10K stereo image pairs with corresponding LiDAR point clouds for ground truth depth estimation.

|

||||

|

||||

## Applications

|

||||

|

||||

The Argoverse dataset is widely used for training and evaluating deep learning models in autonomous driving tasks such as 3D object tracking, motion forecasting, and stereo depth estimation. The dataset's diverse set of sensor data, object annotations, and map information make it a valuable resource for researchers and practitioners in the field of autonomous driving.

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. For the case of the Argoverse dataset, the `Argoverse.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/Argoverse.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/Argoverse.yaml).

|

||||

|

||||

!!! example "ultralytics/datasets/Argoverse.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/Argoverse.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the Argoverse dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='Argoverse.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=Argoverse.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Data and Annotations

|

||||

|

||||

The Argoverse dataset contains a diverse set of sensor data, including camera images, LiDAR point clouds, and HD map information, providing rich context for autonomous driving tasks. Here are some examples of data from the dataset, along with their corresponding annotations:

|

||||

|

||||

|

||||

|

||||

- **Argoverse 3D Tracking**: This image demonstrates an example of 3D object tracking, where objects are annotated with 3D bounding boxes. The dataset provides LiDAR point clouds and camera images to facilitate the development of models for this task.

|

||||

|

||||

The example showcases the variety and complexity of the data in the Argoverse dataset and highlights the importance of high-quality sensor data for autonomous driving tasks.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the Argoverse dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@inproceedings{chang2019argoverse,

|

||||

title={Argoverse: 3D Tracking and Forecasting with Rich Maps},

|

||||

author={Chang, Ming-Fang and Lambert, John and Sangkloy, Patsorn and Singh, Jagjeet and Bak, Slawomir and Hartnett, Andrew and Wang, Dequan and Carr, Peter and Lucey, Simon and Ramanan, Deva and others},

|

||||

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

|

||||

pages={8748--8757},

|

||||

year={2019}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge Argo AI for creating and maintaining the Argoverse dataset as a valuable resource for the autonomous driving research community. For more information about the Argoverse dataset and its creators, visit the [Argoverse dataset website](https://www.argoverse.org/).

|

||||

89

docs/datasets/detect/coco.md

Normal file

89

docs/datasets/detect/coco.md

Normal file

@ -0,0 +1,89 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the COCO dataset, designed to encourage research on object detection, segmentation, and captioning with standardized evaluation metrics.

|

||||

---

|

||||

|

||||

# COCO Dataset

|

||||

|

||||

The [COCO](https://cocodataset.org/#home) (Common Objects in Context) dataset is a large-scale object detection, segmentation, and captioning dataset. It is designed to encourage research on a wide variety of object categories and is commonly used for benchmarking computer vision models. It is an essential dataset for researchers and developers working on object detection, segmentation, and pose estimation tasks.

|

||||

|

||||

## Key Features

|

||||

|

||||

- COCO contains 330K images, with 200K images having annotations for object detection, segmentation, and captioning tasks.

|

||||

- The dataset comprises 80 object categories, including common objects like cars, bicycles, and animals, as well as more specific categories such as umbrellas, handbags, and sports equipment.

|

||||

- Annotations include object bounding boxes, segmentation masks, and captions for each image.

|

||||

- COCO provides standardized evaluation metrics like mean Average Precision (mAP) for object detection, and mean Average Recall (mAR) for segmentation tasks, making it suitable for comparing model performance.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The COCO dataset is split into three subsets:

|

||||

|

||||

1. **Train2017**: This subset contains 118K images for training object detection, segmentation, and captioning models.

|

||||

2. **Val2017**: This subset has 5K images used for validation purposes during model training.

|

||||

3. **Test2017**: This subset consists of 20K images used for testing and benchmarking the trained models. Ground truth annotations for this subset are not publicly available, and the results are submitted to the [COCO evaluation server](https://competitions.codalab.org/competitions/5181) for performance evaluation.

|

||||

|

||||

## Applications

|

||||

|

||||

The COCO dataset is widely used for training and evaluating deep learning models in object detection (such as YOLO, Faster R-CNN, and SSD), instance segmentation (such as Mask R-CNN), and keypoint detection (such as OpenPose). The dataset's diverse set of object categories, large number of annotated images, and standardized evaluation metrics make it an essential resource for computer vision researchers and practitioners.

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO dataset, the `coco.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco.yaml).

|

||||

|

||||

!!! example "ultralytics/datasets/coco.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/coco.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the COCO dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

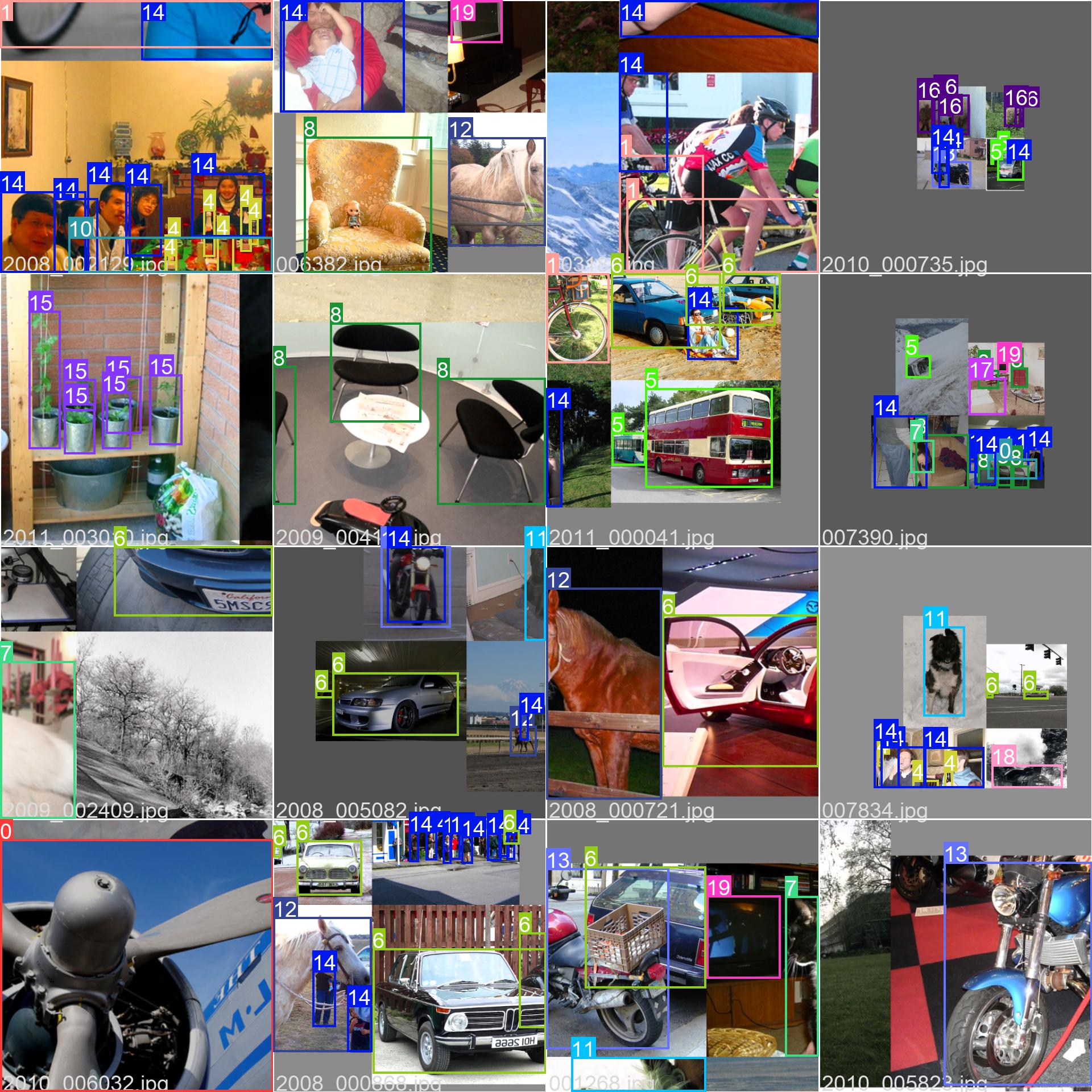

The COCO dataset contains a diverse set of images with various object categories and complex scenes. Here are some examples of images from the dataset, along with their corresponding annotations:

|

||||

|

||||

|

||||

|

||||

- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

|

||||

|

||||

The example showcases the variety and complexity of the images in the COCO dataset and the benefits of using mosaicing during the training process.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the COCO dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@misc{lin2015microsoft,

|

||||

title={Microsoft COCO: Common Objects in Context},

|

||||

author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

|

||||

year={2015},

|

||||

eprint={1405.0312},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.CV}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

|

||||

79

docs/datasets/detect/coco8.md

Normal file

79

docs/datasets/detect/coco8.md

Normal file

@ -0,0 +1,79 @@

|

||||

---

|

||||

comments: true

|

||||

description: Get started with Ultralytics COCO8. Ideal for testing and debugging object detection models or experimenting with new detection approaches.

|

||||

---

|

||||

|

||||

# COCO8 Dataset

|

||||

|

||||

## Introduction

|

||||

|

||||

[Ultralytics](https://ultralytics.com) COCO8 is a small, but versatile object detection dataset composed of the first 8

|

||||

images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and debugging

|

||||

object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough to be

|

||||

easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training

|

||||

larger datasets.

|

||||

|

||||

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

||||

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8 dataset, the `coco8.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8.yaml).

|

||||

|

||||

!!! example "ultralytics/datasets/coco8.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/coco8.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the COCO8 dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco8.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco8.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

Here are some examples of images from the COCO8 dataset, along with their corresponding annotations:

|

||||

|

||||

<img src="https://user-images.githubusercontent.com/26833433/236818348-e6260a3d-0454-436b-83a9-de366ba07235.jpg" alt="Dataset sample image" width="800">

|

||||

|

||||

- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

|

||||

|

||||

The example showcases the variety and complexity of the images in the COCO8 dataset and the benefits of using mosaicing during the training process.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the COCO dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@misc{lin2015microsoft,

|

||||

title={Microsoft COCO: Common Objects in Context},

|

||||

author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

|

||||

year={2015},

|

||||

eprint={1405.0312},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.CV}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

|

||||

86

docs/datasets/detect/globalwheat2020.md

Normal file

86

docs/datasets/detect/globalwheat2020.md

Normal file

@ -0,0 +1,86 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the Global Wheat Head Dataset, aimed at supporting the development of accurate wheat head models for applications in wheat phenotyping and crop management.

|

||||

---

|

||||

|

||||

# Global Wheat Head Dataset

|

||||

|

||||

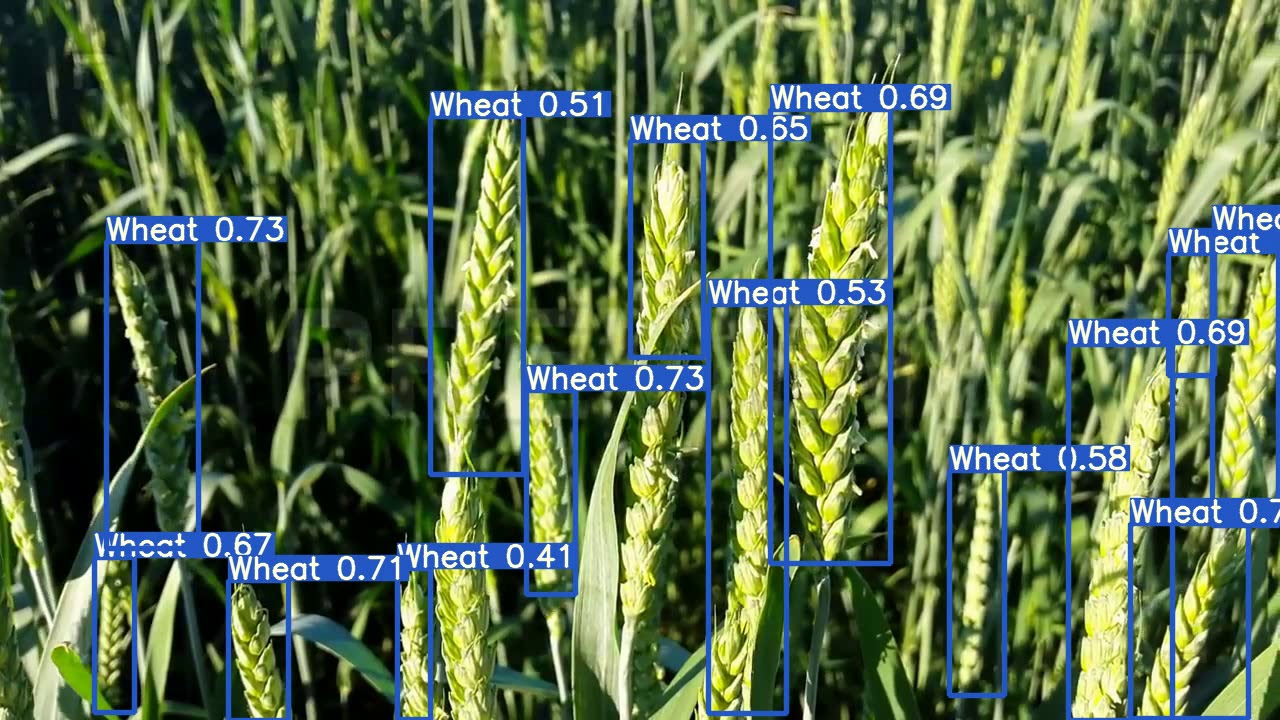

The [Global Wheat Head Dataset](http://www.global-wheat.com/) is a collection of images designed to support the development of accurate wheat head detection models for applications in wheat phenotyping and crop management. Wheat heads, also known as spikes, are the grain-bearing parts of the wheat plant. Accurate estimation of wheat head density and size is essential for assessing crop health, maturity, and yield potential. The dataset, created by a collaboration of nine research institutes from seven countries, covers multiple growing regions to ensure models generalize well across different environments.

|

||||

|

||||

## Key Features

|

||||

|

||||

- The dataset contains over 3,000 training images from Europe (France, UK, Switzerland) and North America (Canada).

|

||||

- It includes approximately 1,000 test images from Australia, Japan, and China.

|

||||

- Images are outdoor field images, capturing the natural variability in wheat head appearances.

|

||||

- Annotations include wheat head bounding boxes to support object detection tasks.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The Global Wheat Head Dataset is organized into two main subsets:

|

||||

|

||||

1. **Training Set**: This subset contains over 3,000 images from Europe and North America. The images are labeled with wheat head bounding boxes, providing ground truth for training object detection models.

|

||||

2. **Test Set**: This subset consists of approximately 1,000 images from Australia, Japan, and China. These images are used for evaluating the performance of trained models on unseen genotypes, environments, and observational conditions.

|

||||

|

||||

## Applications

|

||||

|

||||

The Global Wheat Head Dataset is widely used for training and evaluating deep learning models in wheat head detection tasks. The dataset's diverse set of images, capturing a wide range of appearances, environments, and conditions, make it a valuable resource for researchers and practitioners in the field of plant phenotyping and crop management.

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. For the case of the Global Wheat Head Dataset, the `GlobalWheat2020.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/GlobalWheat2020.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/GlobalWheat2020.yaml).

|

||||

|

||||

!!! example "ultralytics/datasets/GlobalWheat2020.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/GlobalWheat2020.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the Global Wheat Head Dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='GlobalWheat2020.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=GlobalWheat2020.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Data and Annotations

|

||||

|

||||

The Global Wheat Head Dataset contains a diverse set of outdoor field images, capturing the natural variability in wheat head appearances, environments, and conditions. Here are some examples of data from the dataset, along with their corresponding annotations:

|

||||

|

||||

|

||||

|

||||

- **Wheat Head Detection**: This image demonstrates an example of wheat head detection, where wheat heads are annotated with bounding boxes. The dataset provides a variety of images to facilitate the development of models for this task.

|

||||

|

||||

The example showcases the variety and complexity of the data in the Global Wheat Head Dataset and highlights the importance of accurate wheat head detection for applications in wheat phenotyping and crop management.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the Global Wheat Head Dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@article{david2020global,

|

||||

title={Global Wheat Head Detection (GWHD) Dataset: A Large and Diverse Dataset of High-Resolution RGB-Labelled Images to Develop and Benchmark Wheat Head Detection Methods},

|

||||

author={David, Etienne and Madec, Simon and Sadeghi-Tehran, Pouria and Aasen, Helge and Zheng, Bangyou and Liu, Shouyang and Kirchgessner, Norbert and Ishikawa, Goro and Nagasawa, Koichi and Badhon, Minhajul and others},

|

||||

journal={arXiv preprint arXiv:2005.02162},

|

||||

year={2020}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the researchers and institutions that contributed to the creation and maintenance of the Global Wheat Head Dataset as a valuable resource for the plant phenotyping and crop management research community. For more information about the dataset and its creators, visit the [Global Wheat Head Dataset website](http://www.global-wheat.com/).

|

||||

111

docs/datasets/detect/index.md

Normal file

111

docs/datasets/detect/index.md

Normal file

@ -0,0 +1,111 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about supported dataset formats for training YOLO detection models, including Ultralytics YOLO and COCO, in this Object Detection Datasets Overview.

|

||||

---

|

||||

|

||||

# Object Detection Datasets Overview

|

||||

|

||||

## Supported Dataset Formats

|

||||

|

||||

### Ultralytics YOLO format

|

||||

|

||||

** Label Format **

|

||||

|

||||

The dataset format used for training YOLO detection models is as follows:

|

||||

|

||||

1. One text file per image: Each image in the dataset has a corresponding text file with the same name as the image file and the ".txt" extension.

|

||||

2. One row per object: Each row in the text file corresponds to one object instance in the image.

|

||||

3. Object information per row: Each row contains the following information about the object instance:

|

||||

- Object class index: An integer representing the class of the object (e.g., 0 for person, 1 for car, etc.).

|

||||

- Object center coordinates: The x and y coordinates of the center of the object, normalized to be between 0 and 1.

|

||||

- Object width and height: The width and height of the object, normalized to be between 0 and 1.

|

||||

|

||||

The format for a single row in the detection dataset file is as follows:

|

||||

|

||||

```

|

||||

<object-class> <x> <y> <width> <height>

|

||||

```

|

||||

|

||||

Here is an example of the YOLO dataset format for a single image with two object instances:

|

||||

|

||||

```

|

||||

0 0.5 0.4 0.3 0.6

|

||||

1 0.3 0.7 0.4 0.2

|

||||

```

|

||||

|

||||

In this example, the first object is of class 0 (person), with its center at (0.5, 0.4), width of 0.3, and height of 0.6. The second object is of class 1 (car), with its center at (0.3, 0.7), width of 0.4, and height of 0.2.

|

||||

|

||||

** Dataset file format **

|

||||

|

||||

The Ultralytics framework uses a YAML file format to define the dataset and model configuration for training Detection Models. Here is an example of the YAML format used for defining a detection dataset:

|

||||

|

||||

```

|

||||

train: <path-to-training-images>

|

||||

val: <path-to-validation-images>

|

||||

|

||||

nc: <number-of-classes>

|

||||

names: [<class-1>, <class-2>, ..., <class-n>]

|

||||

|

||||

```

|

||||

|

||||

The `train` and `val` fields specify the paths to the directories containing the training and validation images, respectively.

|

||||

|

||||

The `nc` field specifies the number of object classes in the dataset.

|

||||

|

||||

The `names` field is a list of the names of the object classes. The order of the names should match the order of the object class indices in the YOLO dataset files.

|

||||

|

||||

NOTE: Either `nc` or `names` must be defined. Defining both are not mandatory

|

||||

|

||||

Alternatively, you can directly define class names like this:

|

||||

|

||||

```yaml

|

||||

names:

|

||||

0: person

|

||||

1: bicycle

|

||||

```

|

||||

|

||||

** Example **

|

||||

|

||||

```yaml

|

||||

train: data/train/

|

||||

val: data/val/

|

||||

|

||||

nc: 2

|

||||

names: ['person', 'car']

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

!!! example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco128.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco128.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Supported Datasets

|

||||

|

||||

TODO

|

||||

|

||||

## Port or Convert label formats

|

||||

|

||||

### COCO dataset format to YOLO format

|

||||

|

||||

```

|

||||

from ultralytics.yolo.data.converter import convert_coco

|

||||

|

||||

convert_coco(labels_dir='../coco/annotations/')

|

||||

```

|

||||

7

docs/datasets/detect/objects365.md

Normal file

7

docs/datasets/detect/objects365.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/detect/sku-110k.md

Normal file

7

docs/datasets/detect/sku-110k.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

7

docs/datasets/detect/visdrone.md

Normal file

7

docs/datasets/detect/visdrone.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

90

docs/datasets/detect/voc.md

Normal file

90

docs/datasets/detect/voc.md

Normal file

@ -0,0 +1,90 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the VOC dataset, designed to encourage research on object detection, segmentation, and classification with standardized evaluation metrics.

|

||||

---

|

||||

|

||||

# VOC Dataset

|

||||

|

||||

The [PASCAL VOC](http://host.robots.ox.ac.uk/pascal/VOC/) (Visual Object Classes) dataset is a well-known object detection, segmentation, and classification dataset. It is designed to encourage research on a wide variety of object categories and is commonly used for benchmarking computer vision models. It is an essential dataset for researchers and developers working on object detection, segmentation, and classification tasks.

|

||||

|

||||

## Key Features

|

||||

|

||||

- VOC dataset includes two main challenges: VOC2007 and VOC2012.

|

||||

- The dataset comprises 20 object categories, including common objects like cars, bicycles, and animals, as well as more specific categories such as boats, sofas, and dining tables.

|

||||

- Annotations include object bounding boxes and class labels for object detection and classification tasks, and segmentation masks for the segmentation tasks.

|

||||

- VOC provides standardized evaluation metrics like mean Average Precision (mAP) for object detection and classification, making it suitable for comparing model performance.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The VOC dataset is split into three subsets:

|

||||

|

||||

1. **Train**: This subset contains images for training object detection, segmentation, and classification models.

|

||||

2. **Validation**: This subset has images used for validation purposes during model training.

|

||||

3. **Test**: This subset consists of images used for testing and benchmarking the trained models. Ground truth annotations for this subset are not publicly available, and the results are submitted to the [PASCAL VOC evaluation server](http://host.robots.ox.ac.uk:8080/leaderboard/displaylb.php) for performance evaluation.

|

||||

|

||||

## Applications

|

||||

|

||||

The VOC dataset is widely used for training and evaluating deep learning models in object detection (such as YOLO, Faster R-CNN, and SSD), instance segmentation (such as Mask R-CNN), and image classification. The dataset's diverse set of object categories, large number of annotated images, and standardized evaluation metrics make it an essential resource for computer vision researchers and practitioners.

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the VOC dataset, the `VOC.yaml` file should be created and maintained.

|

||||

|

||||

!!! example "ultralytics/datasets/VOC.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/VOC.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the VOC dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='VOC.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from

|

||||

a pretrained *.pt model

|

||||

yolo detect train data=VOC.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

The VOC dataset contains a diverse set of images with various object categories and complex scenes. Here are some examples of images from the dataset, along with their corresponding annotations:

|

||||

|

||||

|

||||

|

||||

- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

|

||||

|

||||

The example showcases the variety and complexity of the images in the VOC dataset and the benefits of using mosaicing during the training process.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the VOC dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@misc{everingham2010pascal,

|

||||

title={The PASCAL Visual Object Classes (VOC) Challenge},

|

||||

author={Mark Everingham and Luc Van Gool and Christopher K. I. Williams and John Winn and Andrew Zisserman},

|

||||

year={2010},

|

||||

eprint={0909.5206},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.CV}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the PASCAL VOC Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the VOC dataset and its creators, visit the [PASCAL VOC dataset website](http://host.robots.ox.ac.uk/pascal/VOC/).

|

||||

7

docs/datasets/detect/xview.md

Normal file

7

docs/datasets/detect/xview.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

58

docs/datasets/index.md

Normal file

58

docs/datasets/index.md

Normal file

@ -0,0 +1,58 @@

|

||||

---

|

||||

comments: true

|

||||

description: Ultralytics provides support for various datasets to facilitate multiple computer vision tasks. Check out our list of main datasets and their summaries.

|

||||

---

|

||||

|

||||

# Datasets Overview

|

||||

|

||||

Ultralytics provides support for various datasets to facilitate computer vision tasks such as detection, instance segmentation, pose estimation, classification, and multi-object tracking. Below is a list of the main Ultralytics datasets, followed by a summary of each computer vision task and the respective datasets.

|

||||

|

||||

## [Detection Datasets](detect/index.md)

|

||||

|

||||

Bounding box object detection is a computer vision technique that involves detecting and localizing objects in an image by drawing a bounding box around each object.

|

||||

|

||||

* [Argoverse](detect/argoverse.md): A dataset containing 3D tracking and motion forecasting data from urban environments with rich annotations.

|

||||

* [COCO](detect/coco.md): A large-scale dataset designed for object detection, segmentation, and captioning with over 200K labeled images.

|

||||

* [COCO8](detect/coco8.md): Contains the first 4 images from COCO train and COCO val, suitable for quick tests.

|

||||

* [Global Wheat 2020](detect/globalwheat2020.md): A dataset of wheat head images collected from around the world for object detection and localization tasks.

|

||||

* [Objects365](detect/objects365.md): A high-quality, large-scale dataset for object detection with 365 object categories and over 600K annotated images.

|

||||

* [SKU-110K](detect/sku-110k.md): A dataset featuring dense object detection in retail environments with over 11K images and 1.7 million bounding boxes.

|

||||

* [VisDrone](detect/visdrone.md): A dataset containing object detection and multi-object tracking data from drone-captured imagery with over 10K images and video sequences.

|

||||

* [VOC](detect/voc.md): The Pascal Visual Object Classes (VOC) dataset for object detection and segmentation with 20 object classes and over 11K images.

|

||||

* [xView](detect/xview.md): A dataset for object detection in overhead imagery with 60 object categories and over 1 million annotated objects.

|

||||

|

||||

## [Instance Segmentation Datasets](segment/index.md)

|

||||

|

||||

Instance segmentation is a computer vision technique that involves identifying and localizing objects in an image at the pixel level.

|

||||

|

||||

* [COCO](segment/coco.md): A large-scale dataset designed for object detection, segmentation, and captioning tasks with over 200K labeled images.

|

||||

* [COCO8-seg](segment/coco8-seg.md): A smaller dataset for instance segmentation tasks, containing a subset of 8 COCO images with segmentation annotations.

|

||||

|

||||

## [Pose Estimation](pose/index.md)

|

||||

|

||||

Pose estimation is a technique used to determine the pose of the object relative to the camera or the world coordinate system.

|

||||

|

||||

* [COCO](pose/coco.md): A large-scale dataset with human pose annotations designed for pose estimation tasks.

|

||||

* [COCO8-pose](pose/coco8-pose.md): A smaller dataset for pose estimation tasks, containing a subset of 8 COCO images with human pose annotations.

|

||||

|

||||

## [Classification](classify/index.md)

|

||||

|

||||

Image classification is a computer vision task that involves categorizing an image into one or more predefined classes or categories based on its visual content.

|

||||

|

||||

* [Caltech 101](classify/caltech101.md): A dataset containing images of 101 object categories for image classification tasks.

|

||||

* [Caltech 256](classify/caltech256.md): An extended version of Caltech 101 with 256 object categories and more challenging images.

|

||||

* [CIFAR-10](classify/cifar10.md): A dataset of 60K 32x32 color images in 10 classes, with 6K images per class.

|

||||

* [CIFAR-100](classify/cifar100.md): An extended version of CIFAR-10 with 100 object categories and 600 images per class.

|

||||

* [Fashion-MNIST](classify/fashion-mnist.md): A dataset consisting of 70,000 grayscale images of 10 fashion categories for image classification tasks.

|

||||

* [ImageNet](classify/imagenet.md): A large-scale dataset for object detection and image classification with over 14 million images and 20,000 categories.

|

||||

* [ImageNet-10](classify/imagenet10.md): A smaller subset of ImageNet with 10 categories for faster experimentation and testing.

|

||||

* [Imagenette](classify/imagenette.md): A smaller subset of ImageNet that contains 10 easily distinguishable classes for quicker training and testing.

|

||||

* [Imagewoof](classify/imagewoof.md): A more challenging subset of ImageNet containing 10 dog breed categories for image classification tasks.

|

||||

* [MNIST](classify/mnist.md): A dataset of 70,000 grayscale images of handwritten digits for image classification tasks.

|

||||

|

||||

## [Multi-Object Tracking](track/index.md)

|

||||

|

||||

Multi-object tracking is a computer vision technique that involves detecting and tracking multiple objects over time in a video sequence.

|

||||

|

||||

* [Argoverse](detect/argoverse.md): A dataset containing 3D tracking and motion forecasting data from urban environments with rich annotations for multi-object tracking tasks.

|

||||

* [VisDrone](detect/visdrone.md): A dataset containing object detection and multi-object tracking data from drone-captured imagery with over 10K images and video sequences.

|

||||

7

docs/datasets/pose/coco.md

Normal file

7

docs/datasets/pose/coco.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

79

docs/datasets/pose/coco8-pose.md

Normal file

79

docs/datasets/pose/coco8-pose.md

Normal file

@ -0,0 +1,79 @@

|

||||

---

|

||||

comments: true

|

||||

description: Test and debug object detection models with Ultralytics COCO8-Pose Dataset - a small, versatile pose detection dataset with 8 images.

|

||||

---

|

||||

|

||||

# COCO8-Pose Dataset

|

||||

|

||||

## Introduction

|

||||

|

||||

[Ultralytics](https://ultralytics.com) COCO8-Pose is a small, but versatile pose detection dataset composed of the first

|

||||

8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

|

||||

debugging object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough

|

||||

to be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before

|

||||

training larger datasets.

|

||||

|

||||

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

||||

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Pose dataset, the `coco8-pose.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-pose.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-pose.yaml).

|

||||

|

||||

!!! example "ultralytics/datasets/coco8-pose.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/coco8-pose.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the COCO8-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco8-pose.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

Here are some examples of images from the COCO8-Pose dataset, along with their corresponding annotations:

|

||||

|

||||

<img src="https://user-images.githubusercontent.com/26833433/236818283-52eecb96-fc6a-420d-8a26-d488b352dd4c.jpg" alt="Dataset sample image" width="800">

|

||||

|

||||

- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

|

||||

|

||||

The example showcases the variety and complexity of the images in the COCO8-Pose dataset and the benefits of using mosaicing during the training process.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the COCO dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@misc{lin2015microsoft,

|

||||

title={Microsoft COCO: Common Objects in Context},

|

||||

author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

|

||||

year={2015},

|

||||

eprint={1405.0312},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.CV}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

|

||||

124

docs/datasets/pose/index.md

Normal file

124

docs/datasets/pose/index.md

Normal file

@ -0,0 +1,124 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn how to format your dataset for training YOLO models with Ultralytics YOLO format using our concise tutorial and example YAML files.

|

||||

---

|

||||

|

||||

# Pose Estimation Datasets Overview

|

||||

|

||||

## Supported Dataset Formats

|

||||

|

||||

### Ultralytics YOLO format

|

||||

|

||||

** Label Format **

|

||||

|

||||

The dataset format used for training YOLO segmentation models is as follows:

|

||||

|

||||

1. One text file per image: Each image in the dataset has a corresponding text file with the same name as the image file and the ".txt" extension.

|

||||

2. One row per object: Each row in the text file corresponds to one object instance in the image.

|

||||

3. Object information per row: Each row contains the following information about the object instance:

|

||||

- Object class index: An integer representing the class of the object (e.g., 0 for person, 1 for car, etc.).

|

||||

- Object center coordinates: The x and y coordinates of the center of the object, normalized to be between 0 and 1.

|

||||

- Object width and height: The width and height of the object, normalized to be between 0 and 1.

|

||||

- Object keypoint coordinates: The keypoints of the object, normalized to be between 0 and 1.

|

||||

|

||||

Here is an example of the label format for pose estimation task:

|

||||

|

||||

Format with Dim = 2

|

||||

|

||||

```

|

||||

<class-index> <x> <y> <width> <height> <px1> <py1> <px2> <py2> ... <pxn> <pyn>

|

||||

```

|

||||

|

||||

Format with Dim = 3

|

||||

|

||||

```

|

||||

<class-index> <x> <y> <width> <height> <px1> <py1> <p1-visibility> <px2> <py2> <p2-visibility> <pxn> <pyn> <p2-visibility>

|

||||

```

|

||||

|

||||

In this format, `<class-index>` is the index of the class for the object,`<x> <y> <width> <height>` are coordinates of boudning box, and `<px1> <py1> <px2> <py2> ... <pxn> <pyn>` are the pixel coordinates of the keypoints. The coordinates are separated by spaces.

|

||||

|

||||

** Dataset file format **

|

||||

|

||||

The Ultralytics framework uses a YAML file format to define the dataset and model configuration for training Detection Models. Here is an example of the YAML format used for defining a detection dataset:

|

||||

|

||||

```yaml

|

||||

train: <path-to-training-images>

|

||||

val: <path-to-validation-images>

|

||||

|

||||

nc: <number-of-classes>

|

||||

names: [<class-1>, <class-2>, ..., <class-n>]

|

||||

|

||||

# Keypoints

|

||||

kpt_shape: [num_kpts, dim] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

|

||||

flip_idx: [n1, n2 ... , n(num_kpts)]

|

||||

|

||||

```

|

||||

|

||||

The `train` and `val` fields specify the paths to the directories containing the training and validation images, respectively.

|

||||

|

||||

The `nc` field specifies the number of object classes in the dataset.

|

||||

|

||||

The `names` field is a list of the names of the object classes. The order of the names should match the order of the object class indices in the YOLO dataset files.

|

||||

|

||||

NOTE: Either `nc` or `names` must be defined. Defining both are not mandatory

|

||||

|

||||

Alternatively, you can directly define class names like this:

|

||||

|

||||

```

|

||||

names:

|

||||

0: person

|

||||

1: bicycle

|

||||

```

|

||||

|

||||

(Optional) if the points are symmetric then need flip_idx, like left-right side of human or face.

|

||||

For example let's say there're five keypoints of facial landmark: [left eye, right eye, nose, left point of mouth, right point of mouse], and the original index is [0, 1, 2, 3, 4], then flip_idx is [1, 0, 2, 4, 3].(just exchange the left-right index, i.e 0-1 and 3-4, and do not modify others like nose in this example)

|

||||

|

||||

** Example **

|

||||

|

||||

```yaml

|

||||

train: data/train/

|

||||

val: data/val/

|

||||

|

||||

nc: 2

|

||||

names: ['person', 'car']

|

||||

|

||||

# Keypoints

|

||||

kpt_shape: [17, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

|

||||

flip_idx: [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

!!! example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-pose.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco128-pose.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco128-pose.yaml model=yolov8n-pose.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Supported Datasets

|

||||

|

||||

TODO

|

||||

|

||||

## Port or Convert label formats

|

||||

|

||||

### COCO dataset format to YOLO format

|

||||

|

||||

```

|

||||

from ultralytics.yolo.data.converter import convert_coco

|

||||

|

||||

convert_coco(labels_dir='../coco/annotations/', use_keypoints=True)

|

||||

```

|

||||

7

docs/datasets/segment/coco.md

Normal file

7

docs/datasets/segment/coco.md

Normal file

@ -0,0 +1,7 @@

|

||||

---

|

||||

comments: true

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

79

docs/datasets/segment/coco8-seg.md

Normal file

79

docs/datasets/segment/coco8-seg.md

Normal file

@ -0,0 +1,79 @@

|

||||

---

|

||||

comments: true

|

||||

description: Test and debug segmentation models on small, versatile COCO8-Seg instance segmentation dataset, now available for use with YOLOv8 and Ultralytics HUB.

|

||||

---

|

||||

|

||||

# COCO8-Seg Dataset

|

||||

|

||||

## Introduction

|

||||

|

||||

[Ultralytics](https://ultralytics.com) COCO8-Seg is a small, but versatile instance segmentation dataset composed of the

|

||||

first 8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

|

||||

debugging segmentation models, or for experimenting with new detection approaches. With 8 images, it is small enough to

|

||||

be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training

|

||||

larger datasets.

|

||||

|

||||

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

||||

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Seg dataset, the `coco8-seg.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-seg.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-seg.yaml).

|

||||

|

||||

!!! example "ultralytics/datasets/coco8-seg.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/coco8-seg.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the COCO8-Seg dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco8-seg.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco8-seg.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

Here are some examples of images from the COCO8-Seg dataset, along with their corresponding annotations:

|

||||

|

||||

<img src="https://user-images.githubusercontent.com/26833433/236818387-f7bde7df-caaa-46d1-8341-1f7504cd11a1.jpg" alt="Dataset sample image" width="800">

|

||||

|

||||

- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

|

||||

|

||||

The example showcases the variety and complexity of the images in the COCO8-Seg dataset and the benefits of using mosaicing during the training process.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the COCO dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@misc{lin2015microsoft,

|

||||

title={Microsoft COCO: Common Objects in Context},

|

||||

author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

|

||||

year={2015},

|

||||

eprint={1405.0312},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.CV}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

|

||||

136

docs/datasets/segment/index.md

Normal file

136

docs/datasets/segment/index.md

Normal file

@ -0,0 +1,136 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the Ultralytics YOLO dataset format for segmentation models. Use YAML to train Detection Models. Convert COCO to YOLO format using Python.

|

||||

---

|

||||

|

||||