mirror of

https://gitee.com/nanjing-yimao-information/ieemoo-ai-gift.git

synced 2025-08-23 23:50:25 +00:00

update

This commit is contained in:

181

docs/en/tasks/classify.md

Normal file

@ -0,0 +1,181 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about YOLOv8 Classify models for image classification. Get detailed information on List of Pretrained Models & how to Train, Validate, Predict & Export models.

|

||||

keywords: Ultralytics, YOLOv8, Image Classification, Pretrained Models, YOLOv8n-cls, Training, Validation, Prediction, Model Export

|

||||

---

|

||||

|

||||

# Image Classification

|

||||

|

||||

<img width="1024" src="https://user-images.githubusercontent.com/26833433/243418606-adf35c62-2e11-405d-84c6-b84e7d013804.png" alt="Image classification examples">

|

||||

|

||||

Image classification is the simplest of the supported tasks and involves classifying an entire image into one of a set of predefined classes.

|

||||

|

||||

The output of an image classifier is a single class label and a confidence score. Image classification is useful when you need to know only what class an image belongs to and don't need to know where objects of that class are located or what their exact shape is.
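As a rough illustration of how a class label and confidence score can be derived from raw model scores, here is a minimal sketch using a softmax. The function name `classify`, the example logits and the class names are hypothetical, and this is not the Ultralytics implementation.

```python
import math


def classify(logits, class_names):
    """Return (label, confidence) for the highest-scoring class."""
    # Softmax with max-subtraction for numerical stability
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Index of the most probable class
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical raw scores for three classes
label, confidence = classify([2.0, 0.5, -1.0], ["cat", "dog", "bus"])
print(label, round(confidence, 3))
```

The confidence is simply the probability assigned to the winning class, so it always lies between 0 and 1.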

|

||||

|

||||

<p align="center">

|

||||

<br>

|

||||

<iframe loading="lazy" width="720" height="405" src="https://www.youtube.com/embed/5BO0Il_YYAg"

|

||||

title="YouTube video player" frameborder="0"

|

||||

allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"

|

||||

allowfullscreen>

|

||||

</iframe>

|

||||

<br>

|

||||

<strong>Watch:</strong> Explore Ultralytics YOLO Tasks: Image Classification using Ultralytics HUB

|

||||

</p>

|

||||

|

||||

!!! Tip "Tip"

|

||||

|

||||

YOLOv8 Classify models use the `-cls` suffix, e.g. `yolov8n-cls.pt`, and are pretrained on [ImageNet](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/ImageNet.yaml).

|

||||

|

||||

## [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models/v8)

|

||||

|

||||

YOLOv8 pretrained Classify models are shown here. Detect, Segment and Pose models are pretrained on the [COCO](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml) dataset, while Classify models are pretrained on the [ImageNet](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/ImageNet.yaml) dataset.

|

||||

|

||||

[Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

|

||||

|

||||

| Model | size<br><sup>(pixels) | acc<br><sup>top1 | acc<br><sup>top5 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) at 640 |

|

||||

|----------------------------------------------------------------------------------------------|-----------------------|------------------|------------------|--------------------------------|-------------------------------------|--------------------|--------------------------|

|

||||

| [YOLOv8n-cls](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8n-cls.pt) | 224 | 69.0 | 88.3 | 12.9 | 0.31 | 2.7 | 4.3 |

|

||||

| [YOLOv8s-cls](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8s-cls.pt) | 224 | 73.8 | 91.7 | 23.4 | 0.35 | 6.4 | 13.5 |

|

||||

| [YOLOv8m-cls](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8m-cls.pt) | 224 | 76.8 | 93.5 | 85.4 | 0.62 | 17.0 | 42.7 |

|

||||

| [YOLOv8l-cls](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8l-cls.pt) | 224 | 76.8 | 93.5 | 163.0 | 0.87 | 37.5 | 99.7 |

|

||||

| [YOLOv8x-cls](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8x-cls.pt) | 224 | 79.0 | 94.6 | 232.0 | 1.01 | 57.4 | 154.8 |

|

||||

|

||||

- **acc** values are model accuracies on the [ImageNet](https://www.image-net.org/) dataset validation set. <br>Reproduce by `yolo val classify data=path/to/ImageNet device=0`

|

||||

- **Speed** averaged over ImageNet val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance. <br>Reproduce by `yolo val classify data=path/to/ImageNet batch=1 device=0|cpu`
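To make the **acc top1** and **acc top5** columns concrete, the following sketch computes top-k accuracy from per-class scores. The helper `topk_accuracy` and the toy data are illustrative assumptions; the toy example uses k=2 because it has only three classes.

```python
def topk_accuracy(scores, targets, k=1):
    """Fraction of samples whose true class index is among the k highest scores."""
    hits = 0
    for row, target in zip(scores, targets):
        # Class indices sorted from highest to lowest score
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        hits += target in ranked[:k]
    return hits / len(targets)


# Toy scores for three samples over three classes; the true class is always 0
scores = [
    [0.7, 0.2, 0.1],  # class 0 ranked first
    [0.3, 0.6, 0.1],  # class 0 ranked second
    [0.1, 0.2, 0.7],  # class 0 ranked third
]
targets = [0, 0, 0]

top1 = topk_accuracy(scores, targets, k=1)
top2 = topk_accuracy(scores, targets, k=2)
print(top1, top2)
```

Top-5 accuracy on ImageNet follows the same rule with k=5 over 1000 classes.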

|

||||

|

||||

## Train

|

||||

|

||||

Train YOLOv8n-cls on the MNIST160 dataset for 100 epochs at image size 64. For a full list of available arguments see the [Configuration](../usage/cfg.md) page.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.yaml') # build a new model from YAML

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

model = YOLO('yolov8n-cls.yaml').load('yolov8n-cls.pt') # build from YAML and transfer weights

|

||||

|

||||

# Train the model

|

||||

results = model.train(data='mnist160', epochs=100, imgsz=64)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Build a new model from YAML and start training from scratch

|

||||

yolo classify train data=mnist160 model=yolov8n-cls.yaml epochs=100 imgsz=64

|

||||

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo classify train data=mnist160 model=yolov8n-cls.pt epochs=100 imgsz=64

|

||||

|

||||

# Build a new model from YAML, transfer pretrained weights to it and start training

|

||||

yolo classify train data=mnist160 model=yolov8n-cls.yaml pretrained=yolov8n-cls.pt epochs=100 imgsz=64

|

||||

```

|

||||

|

||||

### Dataset format

|

||||

|

||||

YOLO classification dataset format can be found in detail in the [Dataset Guide](../datasets/classify/index.md).
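As a quick sanity check before training, the sketch below counts images per class in one dataset split. It assumes a folder-per-class layout (`split/class_name/image`), which is an assumption here and should be confirmed against the Dataset Guide; the helper name and the throwaway directory tree are hypothetical.

```python
import tempfile
from collections import Counter
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def count_images_per_class(split_dir):
    """Count image files in each class sub-folder of one dataset split."""
    counts = Counter()
    for class_dir in sorted(Path(split_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir() if f.suffix.lower() in IMAGE_SUFFIXES
            )
    return dict(counts)


# Build a throwaway dataset tree to demonstrate the assumed layout
with tempfile.TemporaryDirectory() as root:
    for cls, n in {"cat": 2, "dog": 3}.items():
        folder = Path(root) / "train" / cls
        folder.mkdir(parents=True)
        for i in range(n):
            (folder / f"{i}.jpg").touch()
    train_counts = count_images_per_class(Path(root) / "train")

print(train_counts)
```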

|

||||

|

||||

## Val

|

||||

|

||||

Validate trained YOLOv8n-cls model accuracy on the MNIST160 dataset. No arguments need to be passed, as the `model` retains its training `data` and arguments as model attributes.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load an official model

|

||||

model = YOLO('path/to/best.pt') # load a custom model

|

||||

|

||||

# Validate the model

|

||||

metrics = model.val() # no arguments needed, dataset and settings remembered

|

||||

metrics.top1 # top1 accuracy

|

||||

metrics.top5 # top5 accuracy

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

yolo classify val model=yolov8n-cls.pt # val official model

|

||||

yolo classify val model=path/to/best.pt # val custom model

|

||||

```

|

||||

|

||||

## Predict

|

||||

|

||||

Use a trained YOLOv8n-cls model to run predictions on images.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load an official model

|

||||

model = YOLO('path/to/best.pt') # load a custom model

|

||||

|

||||

# Predict with the model

|

||||

results = model('https://ultralytics.com/images/bus.jpg') # predict on an image

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

yolo classify predict model=yolov8n-cls.pt source='https://ultralytics.com/images/bus.jpg' # predict with official model

|

||||

yolo classify predict model=path/to/best.pt source='https://ultralytics.com/images/bus.jpg' # predict with custom model

|

||||

```

|

||||

|

||||

See full `predict` mode details in the [Predict](https://docs.ultralytics.com/modes/predict/) page.

|

||||

|

||||

## Export

|

||||

|

||||

Export a YOLOv8n-cls model to a different format like ONNX, CoreML, etc.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load an official model

|

||||

model = YOLO('path/to/best.pt') # load a custom trained model

|

||||

|

||||

# Export the model

|

||||

model.export(format='onnx')

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

yolo export model=yolov8n-cls.pt format=onnx # export official model

|

||||

yolo export model=path/to/best.pt format=onnx # export custom trained model

|

||||

```

|

||||

|

||||

Available YOLOv8-cls export formats are in the table below. You can predict or validate directly on exported models, e.g. `yolo predict model=yolov8n-cls.onnx`. Usage examples are shown for your model after export completes.

|

||||

|

||||

| Format | `format` Argument | Model | Metadata | Arguments |

|

||||

|--------------------------------------------------------------------|-------------------|-------------------------------|----------|-----------------------------------------------------|

|

||||

| [PyTorch](https://pytorch.org/) | - | `yolov8n-cls.pt` | ✅ | - |

|

||||

| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n-cls.torchscript` | ✅ | `imgsz`, `optimize` |

|

||||

| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n-cls.onnx` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `opset` |

|

||||

| [OpenVINO](../integrations/openvino.md) | `openvino` | `yolov8n-cls_openvino_model/` | ✅ | `imgsz`, `half`, `int8` |

|

||||

| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n-cls.engine` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `workspace` |

|

||||

| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n-cls.mlpackage` | ✅ | `imgsz`, `half`, `int8`, `nms` |

|

||||

| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n-cls_saved_model/` | ✅ | `imgsz`, `keras` |

|

||||

| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n-cls.pb` | ❌ | `imgsz` |

|

||||

| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n-cls.tflite` | ✅ | `imgsz`, `half`, `int8` |

|

||||

| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n-cls_edgetpu.tflite` | ✅ | `imgsz` |

|

||||

| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n-cls_web_model/` | ✅ | `imgsz`, `half`, `int8` |

|

||||

| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n-cls_paddle_model/` | ✅ | `imgsz` |

|

||||

| [NCNN](https://github.com/Tencent/ncnn) | `ncnn` | `yolov8n-cls_ncnn_model/` | ✅ | `imgsz`, `half` |

|

||||

|

||||

See full `export` details in the [Export](https://docs.ultralytics.com/modes/export/) page.

|

||||

182

docs/en/tasks/detect.md

Normal file

@ -0,0 +1,182 @@

|

||||

---

|

||||

comments: true

|

||||

description: Official documentation for YOLOv8 by Ultralytics. Learn how to train, validate, predict and export models in various formats. Including detailed performance stats.

|

||||

keywords: YOLOv8, Ultralytics, object detection, pretrained models, training, validation, prediction, export models, COCO, ImageNet, PyTorch, ONNX, CoreML

|

||||

---

|

||||

|

||||

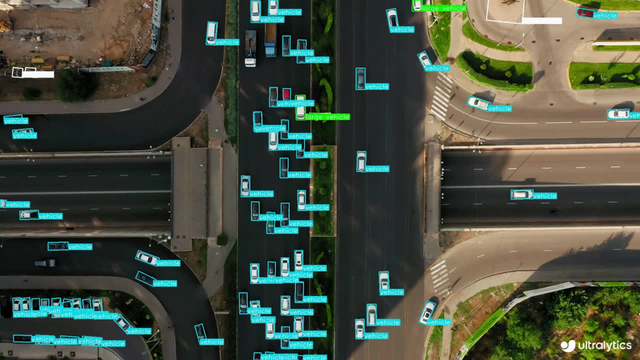

# Object Detection

|

||||

|

||||

<img width="1024" src="https://user-images.githubusercontent.com/26833433/243418624-5785cb93-74c9-4541-9179-d5c6782d491a.png" alt="Object detection examples">

|

||||

|

||||

Object detection is a task that involves identifying the location and class of objects in an image or video stream.

|

||||

|

||||

The output of an object detector is a set of bounding boxes that enclose the objects in the image, along with class labels and confidence scores for each box. Object detection is a good choice when you need to identify and locate objects of interest in a scene, but don't need to know their exact shape.
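The structure of a detector's output can be sketched as a list of records, each holding a box, a class label and a confidence score. The `Detection` dataclass, the `keep_confident` helper and the sample values below are hypothetical and only illustrate the idea; they are not the Ultralytics results API.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    # Box corners in pixels: top-left (x1, y1) and bottom-right (x2, y2)
    x1: float
    y1: float
    x2: float
    y2: float
    label: str
    confidence: float


def keep_confident(detections, threshold=0.5):
    """Keep only detections whose confidence meets the threshold."""
    return [d for d in detections if d.confidence >= threshold]


# Made-up detections for a single image
detections = [
    Detection(10, 20, 110, 220, "person", 0.91),
    Detection(50, 60, 400, 300, "bus", 0.88),
    Detection(5, 5, 30, 30, "dog", 0.12),
]
kept = keep_confident(detections)
print([d.label for d in kept])
```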

|

||||

|

||||

<p align="center">

|

||||

<br>

|

||||

<iframe loading="lazy" width="720" height="405" src="https://www.youtube.com/embed/5ku7npMrW40?si=6HQO1dDXunV8gekh"

|

||||

title="YouTube video player" frameborder="0"

|

||||

allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"

|

||||

allowfullscreen>

|

||||

</iframe>

|

||||

<br>

|

||||

<strong>Watch:</strong> Object Detection with Pre-trained Ultralytics YOLOv8 Model.

|

||||

</p>

|

||||

|

||||

!!! Tip "Tip"

|

||||

|

||||

YOLOv8 Detect models are the default YOLOv8 models, e.g. `yolov8n.pt`, and are pretrained on [COCO](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml).

|

||||

|

||||

## [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models/v8)

|

||||

|

||||

YOLOv8 pretrained Detect models are shown here. Detect, Segment and Pose models are pretrained on the [COCO](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml) dataset, while Classify models are pretrained on the [ImageNet](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/ImageNet.yaml) dataset.

|

||||

|

||||

[Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

|

||||

|

||||

| Model | size<br><sup>(pixels) | mAP<sup>val<br>50-95 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |

|

||||

|--------------------------------------------------------------------------------------|-----------------------|----------------------|--------------------------------|-------------------------------------|--------------------|-------------------|

|

||||

| [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8n.pt) | 640 | 37.3 | 80.4 | 0.99 | 3.2 | 8.7 |

|

||||

| [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8s.pt) | 640 | 44.9 | 128.4 | 1.20 | 11.2 | 28.6 |

|

||||

| [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8m.pt) | 640 | 50.2 | 234.7 | 1.83 | 25.9 | 78.9 |

|

||||

| [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8l.pt) | 640 | 52.9 | 375.2 | 2.39 | 43.7 | 165.2 |

|

||||

| [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8x.pt) | 640 | 53.9 | 479.1 | 3.53 | 68.2 | 257.8 |

|

||||

|

||||

- **mAP<sup>val</sup>** values are for single-model single-scale on [COCO val2017](https://cocodataset.org) dataset. <br>Reproduce by `yolo val detect data=coco.yaml device=0`

|

||||

- **Speed** averaged over COCO val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance. <br>Reproduce by `yolo val detect data=coco128.yaml batch=1 device=0|cpu`
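The "50-95" in the mAP column refers to averaging over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows only the matching criterion behind that metric (box IoU checked against each threshold), not a full average-precision computation; the function name and the example boxes are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Overlap is zero when the boxes do not intersect
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds 0.50, 0.55, ..., 0.95
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

predicted = (0, 0, 10, 10)
ground_truth = (2, 0, 12, 10)
overlap = iou(predicted, ground_truth)

# Thresholds at which this prediction would count as a match
matched_at = [t for t in THRESHOLDS if overlap >= t]
print(round(overlap, 3), matched_at)
```

A prediction that matches at more thresholds contributes to AP at stricter IoU levels, which is why mAP50-95 rewards tighter boxes than mAP50.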

|

||||

|

||||

## Train

|

||||

|

||||

Train YOLOv8n on the COCO128 dataset for 100 epochs at image size 640. For a full list of available arguments see the [Configuration](../usage/cfg.md) page.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.yaml') # build a new model from YAML

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

model = YOLO('yolov8n.yaml').load('yolov8n.pt') # build from YAML and transfer weights

|

||||

|

||||

# Train the model

|

||||

results = model.train(data='coco128.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Build a new model from YAML and start training from scratch

|

||||

yolo detect train data=coco128.yaml model=yolov8n.yaml epochs=100 imgsz=640

|

||||

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco128.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

|

||||

# Build a new model from YAML, transfer pretrained weights to it and start training

|

||||

yolo detect train data=coco128.yaml model=yolov8n.yaml pretrained=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

### Dataset format

|

||||

|

||||

The YOLO detection dataset format is described in detail in the [Dataset Guide](../datasets/detect/index.md). To convert an existing dataset from another format (such as COCO) to YOLO format, use the [JSON2YOLO](https://github.com/ultralytics/JSON2YOLO) tool by Ultralytics.
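The core of such a conversion is a coordinate change. A minimal sketch, assuming COCO boxes are `[x_min, y_min, width, height]` in pixels and YOLO labels use normalized `x_center y_center width height`; the function `coco_to_yolo` is illustrative and is not JSON2YOLO's code.

```python
def coco_to_yolo(bbox, img_w, img_h):
    """Convert a COCO pixel box to a normalized YOLO (cx, cy, w, h) box."""
    x_min, y_min, w, h = bbox
    return (
        (x_min + w / 2) / img_w,  # normalized x center
        (y_min + h / 2) / img_h,  # normalized y center
        w / img_w,                # normalized width
        h / img_h,                # normalized height
    )


# Hypothetical box in a 640x480 image
yolo_box = coco_to_yolo([100, 50, 200, 100], img_w=640, img_h=480)
print(" ".join(f"{v:.6f}" for v in yolo_box))
```

In a label file, each such box is written on its own line, preceded by the integer class index.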

|

||||

|

||||

## Val

|

||||

|

||||

Validate trained YOLOv8n model accuracy on the COCO128 dataset. No arguments need to be passed, as the `model` retains its training `data` and arguments as model attributes.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load an official model

|

||||

model = YOLO('path/to/best.pt') # load a custom model

|

||||

|

||||

# Validate the model

|

||||

metrics = model.val() # no arguments needed, dataset and settings remembered

|

||||

metrics.box.map # map50-95

|

||||

metrics.box.map50 # map50

|

||||

metrics.box.map75 # map75

|

||||

metrics.box.maps # a list containing map50-95 for each category

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

yolo detect val model=yolov8n.pt # val official model

|

||||

yolo detect val model=path/to/best.pt # val custom model

|

||||

```

|

||||

|

||||

## Predict

|

||||

|

||||

Use a trained YOLOv8n model to run predictions on images.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load an official model

|

||||

model = YOLO('path/to/best.pt') # load a custom model

|

||||

|

||||

# Predict with the model

|

||||

results = model('https://ultralytics.com/images/bus.jpg') # predict on an image

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

yolo detect predict model=yolov8n.pt source='https://ultralytics.com/images/bus.jpg' # predict with official model

|

||||

yolo detect predict model=path/to/best.pt source='https://ultralytics.com/images/bus.jpg' # predict with custom model

|

||||

```

|

||||

|

||||

See full `predict` mode details in the [Predict](https://docs.ultralytics.com/modes/predict/) page.

|

||||

|

||||

## Export

|

||||

|

||||

Export a YOLOv8n model to a different format like ONNX, CoreML, etc.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load an official model

|

||||

model = YOLO('path/to/best.pt') # load a custom trained model

|

||||

|

||||

# Export the model

|

||||

model.export(format='onnx')

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

yolo export model=yolov8n.pt format=onnx # export official model

|

||||

yolo export model=path/to/best.pt format=onnx # export custom trained model

|

||||

```

|

||||

|

||||

Available YOLOv8 export formats are in the table below. You can predict or validate directly on exported models, e.g. `yolo predict model=yolov8n.onnx`. Usage examples are shown for your model after export completes.

|

||||

|

||||

| Format | `format` Argument | Model | Metadata | Arguments |

|

||||

|--------------------------------------------------------------------|-------------------|---------------------------|----------|-----------------------------------------------------|

|

||||

| [PyTorch](https://pytorch.org/) | - | `yolov8n.pt` | ✅ | - |

|

||||

| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n.torchscript` | ✅ | `imgsz`, `optimize` |

|

||||

| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n.onnx` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `opset` |

|

||||

| [OpenVINO](../integrations/openvino.md) | `openvino` | `yolov8n_openvino_model/` | ✅ | `imgsz`, `half`, `int8` |

|

||||

| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n.engine` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `workspace` |

|

||||

| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n.mlpackage` | ✅ | `imgsz`, `half`, `int8`, `nms` |

|

||||

| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n_saved_model/` | ✅ | `imgsz`, `keras`, `int8` |

|

||||

| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n.pb` | ❌ | `imgsz` |

|

||||

| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n.tflite` | ✅ | `imgsz`, `half`, `int8` |

|

||||

| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n_edgetpu.tflite` | ✅ | `imgsz` |

|

||||

| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n_web_model/` | ✅ | `imgsz`, `half`, `int8` |

|

||||

| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n_paddle_model/` | ✅ | `imgsz` |

|

||||

| [NCNN](https://github.com/Tencent/ncnn) | `ncnn` | `yolov8n_ncnn_model/` | ✅ | `imgsz`, `half` |

|

||||

|

||||

See full `export` details in the [Export](https://docs.ultralytics.com/modes/export/) page.

|

||||

57

docs/en/tasks/index.md

Normal file

@ -0,0 +1,57 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the cornerstone computer vision tasks YOLOv8 can perform including detection, segmentation, classification, and pose estimation. Understand their uses in your AI projects.

|

||||

keywords: Ultralytics, YOLOv8, Detection, Segmentation, Classification, Pose Estimation, Oriented Object Detection, AI Framework, Computer Vision Tasks

|

||||

---

|

||||

|

||||

# Ultralytics YOLOv8 Tasks

|

||||

|

||||

<br>

|

||||

<img width="1024" src="https://raw.githubusercontent.com/ultralytics/assets/main/im/banner-tasks.png" alt="Ultralytics YOLO supported tasks">

|

||||

|

||||

YOLOv8 is an AI framework that supports multiple computer vision **tasks**. The framework can be used to perform [detection](detect.md), [segmentation](segment.md), [obb](obb.md), [classification](classify.md), and [pose](pose.md) estimation. Each of these tasks has a different objective and use case.

|

||||

|

||||

<p align="center">

|

||||

<br>

|

||||

<iframe loading="lazy" width="720" height="405" src="https://www.youtube.com/embed/NAs-cfq9BDw"

|

||||

title="YouTube video player" frameborder="0"

|

||||

allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"

|

||||

allowfullscreen>

|

||||

</iframe>

|

||||

<br>

|

||||

<strong>Watch:</strong> Explore Ultralytics YOLO Tasks: Object Detection, Segmentation, OBB, Tracking, and Pose Estimation.

|

||||

</p>

|

||||

|

||||

## [Detection](detect.md)

|

||||

|

||||

Detection is the primary task supported by YOLOv8. It involves detecting objects in an image or video frame and drawing bounding boxes around them. The detected objects are classified into different categories based on their features. YOLOv8 can detect multiple objects in a single image or video frame with high accuracy and speed.

|

||||

|

||||

[Detection Examples](detect.md){ .md-button }

|

||||

|

||||

## [Segmentation](segment.md)

|

||||

|

||||

Segmentation is a task that involves dividing an image into regions based on its content and assigning each region a label. This task is useful in applications such as medical imaging. YOLOv8 performs segmentation with its `-seg` models, which add a mask-prediction head to the detection architecture.

|

||||

|

||||

[Segmentation Examples](segment.md){ .md-button }

|

||||

|

||||

## [Classification](classify.md)

|

||||

|

||||

Classification is a task that involves assigning an entire image to one of a set of predefined categories based on its content. YOLOv8 performs classification with its `-cls` models, which attach a classification head to the YOLOv8 backbone.

|

||||

|

||||

[Classification Examples](classify.md){ .md-button }

|

||||

|

||||

## [Pose](pose.md)

|

||||

|

||||

Pose/keypoint detection is a task that involves detecting specific points in an image or video frame. These points are referred to as keypoints and are used to track movement or estimate pose. YOLOv8 can detect keypoints in an image or video frame with high accuracy and speed.

|

||||

|

||||

[Pose Examples](pose.md){ .md-button }

|

||||

|

||||

## [OBB](obb.md)

|

||||

|

||||

Oriented object detection goes a step further than regular object detection by introducing an extra angle to locate objects more accurately in an image. YOLOv8 can detect rotated objects in an image or video frame with high accuracy and speed.

|

||||

|

||||

[Oriented Detection](obb.md){ .md-button }

|

||||

|

||||

## Conclusion

|

||||

|

||||

YOLOv8 supports multiple tasks, including detection, segmentation, classification, oriented object detection and keypoint detection. Each of these tasks has different objectives and use cases. By understanding the differences between these tasks, you can choose the appropriate task for your computer vision application.

|

||||

191

docs/en/tasks/obb.md

Normal file

@ -0,0 +1,191 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn how to use oriented object detection models with Ultralytics YOLO. Instructions on training, validation, image prediction, and model export.

|

||||

keywords: yolov8, oriented object detection, Ultralytics, DOTA dataset, rotated object detection, object detection, model training, model validation, image prediction, model export

|

||||

---

|

||||

|

||||

# Oriented Bounding Boxes Object Detection

|

||||

|

||||

<!-- obb task poster -->

|

||||

|

||||

Oriented object detection goes a step further than object detection and introduces an extra angle to locate objects more accurately in an image.

|

||||

|

||||

The output of an oriented object detector is a set of rotated bounding boxes that tightly enclose the objects in the image, along with class labels and confidence scores for each box. Oriented object detection is a good choice when you need to identify and locate objects of interest in a scene, including their orientation, but don't need to know their exact shape.
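To illustrate what a rotated bounding box encodes, the sketch below turns a center, size and angle into four corner points. The `(cx, cy, w, h, angle)` parameterization, the angle convention (radians, rotating the +x axis toward the +y axis) and the function name are assumptions for illustration; the representation used by Ultralytics may differ.

```python
import math


def obb_corners(cx, cy, w, h, angle_rad):
    """Return the four corners of a box rotated by angle_rad about its center."""
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    # Corner offsets of the unrotated box, relative to its center
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Apply a 2D rotation to each offset, then translate to the center
    return [
        (cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a)
        for dx, dy in offsets
    ]


# A 4x2 box centered at the origin, rotated by 90 degrees
corners = obb_corners(0, 0, 4, 2, math.pi / 2)
rounded = [(round(x, 6), round(y, 6)) for x, y in corners]
print(rounded)
```

With an angle of zero the result reduces to an ordinary axis-aligned box, which is why a regular detector can be seen as the special case without the extra angle.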

|

||||

|

||||

<!-- youtube video link for obb task -->

|

||||

|

||||

!!! Tip "Tip"

|

||||

|

||||

YOLOv8 OBB models use the `-obb` suffix, e.g. `yolov8n-obb.pt`, and are pretrained on [DOTAv1](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/DOTAv1.yaml).

|

||||

|

||||

<p align="center">

|

||||

<br>

|

||||

<iframe loading="lazy" width="720" height="405" src="https://www.youtube.com/embed/Z7Z9pHF8wJc"

|

||||

title="YouTube video player" frameborder="0"

|

||||

allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"

|

||||

allowfullscreen>

|

||||

</iframe>

|

||||

<br>

|

||||

<strong>Watch:</strong> Object Detection using Ultralytics YOLOv8 Oriented Bounding Boxes (YOLOv8-OBB)

|

||||

</p>

|

||||

|

||||

## Visual Samples

|

||||

|

||||

| Ships Detection using OBB | Vehicle Detection using OBB |

|

||||

|:-------------------------------------------------------------------------------------------------------------------------------:|:---------------------------------------------------------------------------------------------------------------------------------:|

|

||||

|  |  |

|

||||

|

||||

## [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models/v8)

|

||||

|

||||

YOLOv8 pretrained OBB models are shown here. They are pretrained on the [DOTAv1](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/DOTAv1.yaml) dataset.

|

||||

|

||||

[Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

|

||||

|

||||

| Model | size<br><sup>(pixels) | mAP<sup>test<br>50 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |

|

||||

|----------------------------------------------------------------------------------------------|-----------------------|--------------------|--------------------------------|-------------------------------------|--------------------|-------------------|

|

||||

| [YOLOv8n-obb](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8n-obb.pt) | 1024 | 78.0 | 204.77 | 3.57 | 3.1 | 23.3 |

|

||||

| [YOLOv8s-obb](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8s-obb.pt) | 1024 | 79.5 | 424.88 | 4.07 | 11.4 | 76.3 |

|

||||

| [YOLOv8m-obb](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8m-obb.pt) | 1024 | 80.5 | 763.48 | 7.61 | 26.4 | 208.6 |

|

||||

| [YOLOv8l-obb](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8l-obb.pt) | 1024 | 80.7 | 1278.42 | 11.83 | 44.5 | 433.8 |

|

||||

| [YOLOv8x-obb](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8x-obb.pt) | 1024 | 81.36 | 1759.10 | 13.23 | 69.5 | 676.7 |

|

||||

|

||||

- **mAP<sup>test</sup>** values are for single-model multiscale on [DOTAv1 test](https://captain-whu.github.io/DOTA/index.html) dataset. <br>Reproduce by `yolo val obb data=DOTAv1.yaml device=0 split=test` and submit merged results to [DOTA evaluation](https://captain-whu.github.io/DOTA/evaluation.html).

|

||||

- **Speed** averaged over DOTAv1 val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance. <br>Reproduce by `yolo val obb data=DOTAv1.yaml batch=1 device=0|cpu`

|

||||

|

||||

## Train

|

||||

|

||||

Train YOLOv8n-obb on the `dota8.yaml` dataset for 100 epochs at image size 640. For a full list of available arguments see the [Configuration](../usage/cfg.md) page.

|

||||

|

||||

!!! Example

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-obb.yaml') # build a new model from YAML

|

||||

model = YOLO('yolov8n-obb.pt') # load a pretrained model (recommended for training)

|

||||

model = YOLO('yolov8n-obb.yaml').load('yolov8n.pt') # build from YAML and transfer weights

|

||||

|

||||

# Train the model

|

||||

results = model.train(data='dota8.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Build a new model from YAML and start training from scratch

|

||||

yolo obb train data=dota8.yaml model=yolov8n-obb.yaml epochs=100 imgsz=640

|

||||

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo obb train data=dota8.yaml model=yolov8n-obb.pt epochs=100 imgsz=640

|

||||

|

||||

# Build a new model from YAML, transfer pretrained weights to it and start training

|

||||

yolo obb train data=dota8.yaml model=yolov8n-obb.yaml pretrained=yolov8n-obb.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

### Dataset format

|

||||

|

||||

OBB dataset format can be found in detail in the [Dataset Guide](../datasets/obb/index.md).

|

||||

|

||||

## Val

|

||||

|

||||

Validate trained YOLOv8n-obb model accuracy on the DOTA8 dataset. No arguments need to be passed, as the `model` retains its training `data` and arguments as model attributes.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-obb.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom model

        # Validate the model
        metrics = model.val(data='dota8.yaml')  # data passed explicitly here; a trained model also remembers its dataset and settings
        metrics.box.map  # map50-95(B)
        metrics.box.map50  # map50(B)
        metrics.box.map75  # map75(B)
        metrics.box.maps  # a list containing map50-95(B) of each category
        ```

    === "CLI"

        ```bash
        yolo obb val model=yolov8n-obb.pt data=dota8.yaml  # val official model
        yolo obb val model=path/to/best.pt data=path/to/data.yaml  # val custom model
        ```

|

||||
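To show how the `map`, `map50`, and `map75` values relate, here is a small sketch following the COCO convention, where the 50-95 figure is the mean of average precision (AP) over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The AP numbers are made up for illustration and cover a single class; a real mAP additionally averages over classes.

```python
# IoU thresholds 0.50, 0.55, ..., 0.95 (10 values)
iou_thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]

# Hypothetical AP of one class at each threshold (illustrative numbers only)
ap_per_threshold = [0.80, 0.78, 0.75, 0.71, 0.66, 0.60, 0.52, 0.41, 0.27, 0.10]

# AP at a single threshold is a lookup; the 50-95 figure is the mean of all ten
ap50 = ap_per_threshold[iou_thresholds.index(0.50)]
ap75 = ap_per_threshold[iou_thresholds.index(0.75)]
ap50_95 = sum(ap_per_threshold) / len(ap_per_threshold)

print(f"AP50={ap50:.2f} AP75={ap75:.2f} AP50-95={ap50_95:.2f}")
```

Because stricter thresholds demand tighter localization, the 50-95 figure is typically lower than the value at 0.50 alone.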

|

||||

## Predict

|

||||

|

||||

Use a trained YOLOv8n-obb model to run predictions on images.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-obb.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom model

        # Predict with the model
        results = model('https://ultralytics.com/images/bus.jpg')  # predict on an image
        ```

    === "CLI"

        ```bash
        yolo obb predict model=yolov8n-obb.pt source='https://ultralytics.com/images/bus.jpg'  # predict with official model
        yolo obb predict model=path/to/best.pt source='https://ultralytics.com/images/bus.jpg'  # predict with custom model
        ```

|

||||

|

||||

See full `predict` mode details in the [Predict](https://docs.ultralytics.com/modes/predict/) page.

|

||||

|

||||

## Export

|

||||

|

||||

Export a YOLOv8n-obb model to a different format like ONNX, CoreML, etc.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-obb.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom trained model

        # Export the model
        model.export(format='onnx')
        ```

    === "CLI"

        ```bash
        yolo export model=yolov8n-obb.pt format=onnx  # export official model
        yolo export model=path/to/best.pt format=onnx  # export custom trained model
        ```

|

||||

|

||||

Available YOLOv8-obb export formats are in the table below. You can predict or validate directly on exported models, e.g. `yolo predict model=yolov8n-obb.onnx`. Usage examples are shown for your model after export completes.

|

||||

|

||||

| Format | `format` Argument | Model | Metadata | Arguments |
|--------|-------------------|-------|----------|-----------|
| [PyTorch](https://pytorch.org/) | - | `yolov8n-obb.pt` | ✅ | - |
| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n-obb.torchscript` | ✅ | `imgsz`, `optimize` |
| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n-obb.onnx` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `opset` |
| [OpenVINO](../integrations/openvino.md) | `openvino` | `yolov8n-obb_openvino_model/` | ✅ | `imgsz`, `half`, `int8` |
| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n-obb.engine` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `workspace` |
| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n-obb.mlpackage` | ✅ | `imgsz`, `half`, `int8`, `nms` |
| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n-obb_saved_model/` | ✅ | `imgsz`, `keras` |
| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n-obb.pb` | ❌ | `imgsz` |
| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n-obb.tflite` | ✅ | `imgsz`, `half`, `int8` |
| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n-obb_edgetpu.tflite` | ✅ | `imgsz` |
| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n-obb_web_model/` | ✅ | `imgsz`, `half`, `int8` |
| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n-obb_paddle_model/` | ✅ | `imgsz` |
| [NCNN](https://github.com/Tencent/ncnn) | `ncnn` | `yolov8n-obb_ncnn_model/` | ✅ | `imgsz`, `half` |

|

||||

|

||||

See full `export` details in the [Export](https://docs.ultralytics.com/modes/export/) page.
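The Model column of the export table follows a simple naming pattern: the stem of the weights file plus a format-specific suffix. The sketch below encodes that column as a lookup so the expected artifact name can be derived in a script. `exported_name` is a hypothetical helper rather than an Ultralytics function, and the mapping is transcribed from the table, so it only reflects what the table states.

```python
from pathlib import Path

# Suffix appended to the model stem for each `format` argument,
# transcribed from the Model column of the export table.
EXPORT_SUFFIXES = {
    "torchscript": ".torchscript",
    "onnx": ".onnx",
    "openvino": "_openvino_model/",
    "engine": ".engine",
    "coreml": ".mlpackage",
    "saved_model": "_saved_model/",
    "pb": ".pb",
    "tflite": ".tflite",
    "edgetpu": "_edgetpu.tflite",
    "tfjs": "_web_model/",
    "paddle": "_paddle_model/",
    "ncnn": "_ncnn_model/",
}


def exported_name(weights: str, fmt: str) -> str:
    """Return the artifact name the table lists for `weights` exported as `fmt`."""
    if fmt not in EXPORT_SUFFIXES:
        raise ValueError(f"unknown export format: {fmt}")
    return Path(weights).stem + EXPORT_SUFFIXES[fmt]


print(exported_name("yolov8n-obb.pt", "onnx"))
print(exported_name("yolov8n-obb.pt", "openvino"))
```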

|

||||

197

docs/en/tasks/pose.md

Normal file


@ -0,0 +1,197 @@

|

||||

---
comments: true
description: Learn how to use Ultralytics YOLOv8 for pose estimation tasks. Find pretrained models, learn how to train, validate, predict, and export your own.
keywords: Ultralytics, YOLO, YOLOv8, pose estimation, keypoints detection, object detection, pre-trained models, machine learning, artificial intelligence
---

|

||||

|

||||

# Pose Estimation

|

||||

|

||||

<img width="1024" src="https://user-images.githubusercontent.com/26833433/243418616-9811ac0b-a4a7-452a-8aba-484ba32bb4a8.png" alt="Pose estimation examples">

|

||||

|

||||

Pose estimation is a task that involves identifying the location of specific points in an image, usually referred to as keypoints. The keypoints can represent various parts of the object such as joints, landmarks, or other distinctive features. The locations of the keypoints are usually represented as a set of 2D `[x, y]` or 3D `[x, y, visible]` coordinates.
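To make the two layouts concrete, here is a small sketch that groups a flat list of keypoint values into per-point tuples. In the three-value layout the third entry is a visibility flag rather than a spatial coordinate. The function name and sample values are illustrative assumptions.

```python
def group_keypoints(flat, ndim):
    """Split a flat list into tuples of length `ndim`.

    ndim=2 gives (x, y) points; ndim=3 gives (x, y, visible) points.
    """
    if ndim not in (2, 3):
        raise ValueError("ndim must be 2 or 3")
    if len(flat) % ndim != 0:
        raise ValueError("flat list length must be a multiple of ndim")
    return [tuple(flat[i:i + ndim]) for i in range(0, len(flat), ndim)]


points_2d = group_keypoints([0.5, 0.2, 0.6, 0.4], ndim=2)
points_3d = group_keypoints([0.5, 0.2, 2, 0.6, 0.4, 0], ndim=3)
print(points_2d)
print(points_3d)
```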

|

||||

|

||||

The output of a pose estimation model is a set of points that represent the keypoints on an object in the image, usually along with the confidence scores for each point. Pose estimation is a good choice when you need to identify specific parts of an object in a scene, and their location in relation to each other.
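As one example of using keypoint locations in relation to each other, the sketch below computes the angle at a joint from three keypoints, such as shoulder, elbow, and wrist. The coordinates are made-up pixel values, and `joint_angle` is an illustrative helper rather than part of any library.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("a and c must differ from the joint b")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against floating-point drift outside [-1, 1]
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


# Hypothetical keypoints: the forearm is perpendicular to the upper arm
shoulder, elbow, wrist = (100, 100), (100, 200), (200, 200)
angle = joint_angle(shoulder, elbow, wrist)
print(f"elbow angle: {angle:.1f} degrees")
```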

|

||||

|

||||

<table>
  <tr>
    <td align="center">
      <iframe loading="lazy" width="720" height="405" src="https://www.youtube.com/embed/Y28xXQmju64?si=pCY4ZwejZFu6Z4kZ"
        title="YouTube video player" frameborder="0"
        allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"
        allowfullscreen>
      </iframe>
      <br>
      <strong>Watch:</strong> Pose Estimation with Ultralytics YOLOv8.
    </td>
    <td align="center">
      <iframe loading="lazy" width="720" height="405" src="https://www.youtube.com/embed/aeAX6vWpfR0"
        title="YouTube video player" frameborder="0"
        allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"
        allowfullscreen>
      </iframe>
      <br>
      <strong>Watch:</strong> Pose Estimation with Ultralytics HUB.
    </td>
  </tr>
</table>

|

||||

|

||||

!!! Tip "Tip"

    YOLOv8 _pose_ models use the `-pose` suffix, e.g. `yolov8n-pose.pt`. These models are trained on the [COCO keypoints](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco-pose.yaml) dataset and are suitable for a variety of pose estimation tasks.

|

||||

|

||||

## [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models/v8)

|

||||

|

||||

YOLOv8 pretrained Pose models are shown here. Detect, Segment and Pose models are pretrained on the [COCO](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml) dataset, while Classify models are pretrained on the [ImageNet](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/ImageNet.yaml) dataset.

|

||||

|

||||

[Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

|

||||

|

||||

| Model | size<br><sup>(pixels) | mAP<sup>pose<br>50-95 | mAP<sup>pose<br>50 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |
|-------|-----------------------|-----------------------|--------------------|--------------------------------|-------------------------------------|--------------------|-------------------|
| [YOLOv8n-pose](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8n-pose.pt) | 640 | 50.4 | 80.1 | 131.8 | 1.18 | 3.3 | 9.2 |
| [YOLOv8s-pose](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8s-pose.pt) | 640 | 60.0 | 86.2 | 233.2 | 1.42 | 11.6 | 30.2 |
| [YOLOv8m-pose](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8m-pose.pt) | 640 | 65.0 | 88.8 | 456.3 | 2.00 | 26.4 | 81.0 |
| [YOLOv8l-pose](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8l-pose.pt) | 640 | 67.6 | 90.0 | 784.5 | 2.59 | 44.4 | 168.6 |
| [YOLOv8x-pose](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8x-pose.pt) | 640 | 69.2 | 90.2 | 1607.1 | 3.73 | 69.4 | 263.2 |
| [YOLOv8x-pose-p6](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8x-pose-p6.pt) | 1280 | 71.6 | 91.2 | 4088.7 | 10.04 | 99.1 | 1066.4 |

|

||||

|

||||

- **mAP<sup>val</sup>** values are for single-model single-scale on [COCO Keypoints val2017](https://cocodataset.org) dataset. <br>Reproduce by `yolo val pose data=coco-pose.yaml device=0`

|

||||

- **Speed** averaged over COCO val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance. <br>Reproduce by `yolo val pose data=coco8-pose.yaml batch=1 device=0|cpu`
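The Speed columns report per-image latency in milliseconds. At batch size 1, the equivalent throughput in images per second is roughly `1000 / latency`, ignoring any overhead the table does not capture. A quick sketch using two rows copied from the table above:

```python
# Latencies (ms per image) copied from the pose model table
latency_ms = {
    "YOLOv8n-pose": {"cpu_onnx": 131.8, "a100_tensorrt": 1.18},
    "YOLOv8x-pose": {"cpu_onnx": 1607.1, "a100_tensorrt": 3.73},
}


def to_fps(ms: float) -> float:
    """Convert a per-image latency in milliseconds to images per second."""
    if ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / ms


for name, speeds in latency_ms.items():
    fps = {backend: round(to_fps(ms), 1) for backend, ms in speeds.items()}
    print(name, fps)
```

Read this way, the table suggests the smallest model handles several frames per second on CPU, while the largest needs more than a second per image there.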

|

||||

|

||||

## Train

|

||||

|

||||

Train a YOLOv8-pose model on the COCO8-pose dataset.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-pose.yaml')  # build a new model from YAML
        model = YOLO('yolov8n-pose.pt')  # load a pretrained model (recommended for training)
        model = YOLO('yolov8n-pose.yaml').load('yolov8n-pose.pt')  # build from YAML and transfer weights

        # Train the model
        results = model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)
        ```

    === "CLI"

        ```bash
        # Build a new model from YAML and start training from scratch
        yolo pose train data=coco8-pose.yaml model=yolov8n-pose.yaml epochs=100 imgsz=640

        # Start training from a pretrained *.pt model
        yolo pose train data=coco8-pose.yaml model=yolov8n-pose.pt epochs=100 imgsz=640

        # Build a new model from YAML, transfer pretrained weights to it and start training
        yolo pose train data=coco8-pose.yaml model=yolov8n-pose.yaml pretrained=yolov8n-pose.pt epochs=100 imgsz=640
        ```

|

||||

|

||||

### Dataset format

|

||||

|

||||

YOLO pose dataset format can be found in detail in the [Dataset Guide](../datasets/pose/index.md). To convert your existing dataset from other formats (like COCO etc.) to YOLO format, please use [JSON2YOLO](https://github.com/ultralytics/JSON2YOLO) tool by Ultralytics.
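As a rough illustration of what such a conversion involves (a sketch only, not the JSON2YOLO implementation), the function below turns one COCO-style annotation into a normalized row. It relies on the COCO conventions of a pixel-space `[x_min, y_min, width, height]` box and flat `[x, y, v, ...]` keypoints. The output row layout, `class cx cy w h` followed by normalized keypoint triples, is an assumption here; the Dataset Guide is authoritative.

```python
def coco_to_yolo_pose(bbox, keypoints, img_w, img_h, class_id=0):
    """Convert one COCO-style pose annotation to a normalized row (list of numbers).

    Illustrative sketch: the row layout is an assumption, see the Dataset Guide.
    """
    x_min, y_min, w, h = bbox
    row = [
        class_id,
        (x_min + w / 2) / img_w,  # box center x, normalized
        (y_min + h / 2) / img_h,  # box center y, normalized
        w / img_w,                # box width, normalized
        h / img_h,                # box height, normalized
    ]
    # Keypoints arrive as flat [x, y, v, x, y, v, ...] in pixels
    for i in range(0, len(keypoints), 3):
        x, y, v = keypoints[i:i + 3]
        row += [x / img_w, y / img_h, v]
    return row


row = coco_to_yolo_pose(
    bbox=[100, 50, 200, 100],
    keypoints=[150, 75, 2, 250, 125, 1],
    img_w=400,
    img_h=200,
)
print(" ".join(f"{value:g}" for value in row))
```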

|

||||

|

||||

## Val

|

||||

|

||||

Validate trained YOLOv8n-pose model accuracy on the COCO8-pose dataset. No arguments need to be passed, as the `model` retains its training `data` and arguments as model attributes.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-pose.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom model

        # Validate the model
        metrics = model.val()  # no arguments needed, dataset and settings remembered
        metrics.box.map  # map50-95
        metrics.box.map50  # map50
        metrics.box.map75  # map75
        metrics.box.maps  # a list containing map50-95 of each category
        ```

    === "CLI"

        ```bash
        yolo pose val model=yolov8n-pose.pt  # val official model
        yolo pose val model=path/to/best.pt  # val custom model
        ```

|

||||

|

||||

## Predict

|

||||

|

||||

Use a trained YOLOv8n-pose model to run predictions on images.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-pose.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom model

        # Predict with the model
        results = model('https://ultralytics.com/images/bus.jpg')  # predict on an image
        ```

    === "CLI"

        ```bash
        yolo pose predict model=yolov8n-pose.pt source='https://ultralytics.com/images/bus.jpg'  # predict with official model
        yolo pose predict model=path/to/best.pt source='https://ultralytics.com/images/bus.jpg'  # predict with custom model
        ```

|

||||

|

||||

See full `predict` mode details in the [Predict](https://docs.ultralytics.com/modes/predict/) page.

|

||||

|

||||

## Export

|

||||

|

||||

Export a YOLOv8n-pose model to a different format like ONNX, CoreML, etc.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-pose.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom trained model

        # Export the model
        model.export(format='onnx')
        ```

    === "CLI"

        ```bash
        yolo export model=yolov8n-pose.pt format=onnx  # export official model
        yolo export model=path/to/best.pt format=onnx  # export custom trained model
        ```

|

||||

|

||||

Available YOLOv8-pose export formats are in the table below. You can predict or validate directly on exported models, e.g. `yolo predict model=yolov8n-pose.onnx`. Usage examples are shown for your model after export completes.

|

||||

|

||||

| Format | `format` Argument | Model | Metadata | Arguments |
|--------|-------------------|-------|----------|-----------|
| [PyTorch](https://pytorch.org/) | - | `yolov8n-pose.pt` | ✅ | - |
| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n-pose.torchscript` | ✅ | `imgsz`, `optimize` |
| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n-pose.onnx` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `opset` |
| [OpenVINO](../integrations/openvino.md) | `openvino` | `yolov8n-pose_openvino_model/` | ✅ | `imgsz`, `half`, `int8` |
| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n-pose.engine` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `workspace` |
| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n-pose.mlpackage` | ✅ | `imgsz`, `half`, `int8`, `nms` |
| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n-pose_saved_model/` | ✅ | `imgsz`, `keras` |
| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n-pose.pb` | ❌ | `imgsz` |
| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n-pose.tflite` | ✅ | `imgsz`, `half`, `int8` |
| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n-pose_edgetpu.tflite` | ✅ | `imgsz` |
| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n-pose_web_model/` | ✅ | `imgsz`, `half`, `int8` |
| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n-pose_paddle_model/` | ✅ | `imgsz` |
| [NCNN](https://github.com/Tencent/ncnn) | `ncnn` | `yolov8n-pose_ncnn_model/` | ✅ | `imgsz`, `half` |

|

||||

|

||||

See full `export` details in the [Export](https://docs.ultralytics.com/modes/export/) page.

|

||||

187

docs/en/tasks/segment.md

Normal file


@ -0,0 +1,187 @@

|

||||

---
comments: true
description: Learn how to use instance segmentation models with Ultralytics YOLO. Instructions on training, validation, image prediction, and model export.
keywords: yolov8, instance segmentation, Ultralytics, COCO dataset, image segmentation, object detection, model training, model validation, image prediction, model export
---

|

||||

|

||||

# Instance Segmentation

|

||||

|

||||

<img width="1024" src="https://user-images.githubusercontent.com/26833433/243418644-7df320b8-098d-47f1-85c5-26604d761286.png" alt="Instance segmentation examples">

|

||||

|

||||

Instance segmentation goes a step further than object detection and involves identifying individual objects in an image and segmenting them from the rest of the image.

|

||||

|

||||

The output of an instance segmentation model is a set of masks or contours that outline each object in the image, along with class labels and confidence scores for each object. Instance segmentation is useful when you need to know not only where objects are in an image, but also what their exact shape is.
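To illustrate why a contour carries more information than a box, the sketch below takes a polygon outline of the kind a segmentation model might return and computes both its area (via the shoelace formula) and its axis-aligned bounding box. The L-shaped polygon is a made-up example, and the helper names are illustrative.

```python
def polygon_area(points):
    """Shoelace formula: area of a simple polygon given as [(x, y), ...]."""
    twice_area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def bounding_box(points):
    """Axis-aligned box (x_min, y_min, x_max, y_max) enclosing the polygon."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


# Hypothetical L-shaped object outline
contour = [(0, 0), (4, 0), (4, 2), (2, 2), (2, 4), (0, 4)]
mask_area = polygon_area(contour)
x0, y0, x1, y1 = bounding_box(contour)
box_area = (x1 - x0) * (y1 - y0)
print(f"mask area={mask_area}, box area={box_area}")
```

Here the box covers the empty corner of the L as well, so a box alone overstates the object's extent while the contour does not.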

|

||||

|

||||

<p align="center">
  <br>
  <iframe loading="lazy" width="720" height="405" src="https://www.youtube.com/embed/o4Zd-IeMlSY?si=37nusCzDTd74Obsp"
    title="YouTube video player" frameborder="0"
    allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"
    allowfullscreen>
  </iframe>
  <br>
  <strong>Watch:</strong> Run Segmentation with Pre-Trained Ultralytics YOLOv8 Model in Python.
</p>

|

||||

|

||||

!!! Tip "Tip"

    YOLOv8 Segment models use the `-seg` suffix, e.g. `yolov8n-seg.pt`, and are pretrained on [COCO](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml).

|

||||

|

||||

## [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models/v8)

|

||||

|

||||

YOLOv8 pretrained Segment models are shown here. Detect, Segment and Pose models are pretrained on the [COCO](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml) dataset, while Classify models are pretrained on the [ImageNet](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/ImageNet.yaml) dataset.

|

||||

|

||||

[Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

|

||||

|

||||

| Model | size<br><sup>(pixels) | mAP<sup>box<br>50-95 | mAP<sup>mask<br>50-95 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |
|-------|-----------------------|----------------------|-----------------------|--------------------------------|-------------------------------------|--------------------|-------------------|
| [YOLOv8n-seg](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8n-seg.pt) | 640 | 36.7 | 30.5 | 96.1 | 1.21 | 3.4 | 12.6 |
| [YOLOv8s-seg](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8s-seg.pt) | 640 | 44.6 | 36.8 | 155.7 | 1.47 | 11.8 | 42.6 |
| [YOLOv8m-seg](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8m-seg.pt) | 640 | 49.9 | 40.8 | 317.0 | 2.18 | 27.3 | 110.2 |
| [YOLOv8l-seg](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8l-seg.pt) | 640 | 52.3 | 42.6 | 572.4 | 2.79 | 46.0 | 220.5 |
| [YOLOv8x-seg](https://github.com/ultralytics/assets/releases/download/v8.1.0/yolov8x-seg.pt) | 640 | 53.4 | 43.4 | 712.1 | 4.02 | 71.8 | 344.1 |

|

||||

|

||||

- **mAP<sup>val</sup>** values are for single-model single-scale on [COCO val2017](https://cocodataset.org) dataset. <br>Reproduce by `yolo val segment data=coco.yaml device=0`
- **Speed** averaged over COCO val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance. <br>Reproduce by `yolo val segment data=coco128-seg.yaml batch=1 device=0|cpu`

|

||||

|

||||

## Train

|

||||

|

||||

Train YOLOv8n-seg on the COCO128-seg dataset for 100 epochs at image size 640. For a full list of available arguments see the [Configuration](../usage/cfg.md) page.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-seg.yaml')  # build a new model from YAML
        model = YOLO('yolov8n-seg.pt')  # load a pretrained model (recommended for training)
        model = YOLO('yolov8n-seg.yaml').load('yolov8n.pt')  # build from YAML and transfer weights

        # Train the model
        results = model.train(data='coco128-seg.yaml', epochs=100, imgsz=640)
        ```

    === "CLI"

        ```bash
        # Build a new model from YAML and start training from scratch
        yolo segment train data=coco128-seg.yaml model=yolov8n-seg.yaml epochs=100 imgsz=640

        # Start training from a pretrained *.pt model
        yolo segment train data=coco128-seg.yaml model=yolov8n-seg.pt epochs=100 imgsz=640

        # Build a new model from YAML, transfer pretrained weights to it and start training
        yolo segment train data=coco128-seg.yaml model=yolov8n-seg.yaml pretrained=yolov8n-seg.pt epochs=100 imgsz=640
        ```

|

||||

|

||||

### Dataset format

|

||||

|

||||

YOLO segmentation dataset format can be found in detail in the [Dataset Guide](../datasets/segment/index.md). To convert your existing dataset from other formats (like COCO etc.) to YOLO format, please use [JSON2YOLO](https://github.com/ultralytics/JSON2YOLO) tool by Ultralytics.

|

||||

|

||||

## Val

|

||||

|

||||

Validate trained YOLOv8n-seg model accuracy on the COCO128-seg dataset. No arguments need to be passed, as the `model` retains its training `data` and arguments as model attributes.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-seg.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom model

        # Validate the model
        metrics = model.val()  # no arguments needed, dataset and settings remembered
        metrics.box.map  # map50-95(B)
        metrics.box.map50  # map50(B)
        metrics.box.map75  # map75(B)
        metrics.box.maps  # a list containing map50-95(B) of each category
        metrics.seg.map  # map50-95(M)
        metrics.seg.map50  # map50(M)
        metrics.seg.map75  # map75(M)
        metrics.seg.maps  # a list containing map50-95(M) of each category
        ```

    === "CLI"

        ```bash
        yolo segment val model=yolov8n-seg.pt  # val official model
        yolo segment val model=path/to/best.pt  # val custom model
        ```

|

||||

|

||||

## Predict

|

||||

|

||||

Use a trained YOLOv8n-seg model to run predictions on images.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-seg.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom model

        # Predict with the model
        results = model('https://ultralytics.com/images/bus.jpg')  # predict on an image
        ```

    === "CLI"

        ```bash
        yolo segment predict model=yolov8n-seg.pt source='https://ultralytics.com/images/bus.jpg'  # predict with official model
        yolo segment predict model=path/to/best.pt source='https://ultralytics.com/images/bus.jpg'  # predict with custom model
        ```

|

||||

|

||||

See full `predict` mode details in the [Predict](https://docs.ultralytics.com/modes/predict/) page.

|

||||

|

||||

## Export

|

||||

|

||||

Export a YOLOv8n-seg model to a different format like ONNX, CoreML, etc.

|

||||

|

||||

!!! Example

    === "Python"

        ```python
        from ultralytics import YOLO

        # Load a model
        model = YOLO('yolov8n-seg.pt')  # load an official model
        model = YOLO('path/to/best.pt')  # load a custom trained model

        # Export the model
        model.export(format='onnx')
        ```

    === "CLI"

        ```bash
        yolo export model=yolov8n-seg.pt format=onnx  # export official model
        yolo export model=path/to/best.pt format=onnx  # export custom trained model
        ```

|

||||

|

||||

Available YOLOv8-seg export formats are in the table below. You can predict or validate directly on exported models, e.g. `yolo predict model=yolov8n-seg.onnx`. Usage examples are shown for your model after export completes.

|

||||

|

||||

| Format | `format` Argument | Model | Metadata | Arguments |
|--------|-------------------|-------|----------|-----------|
| [PyTorch](https://pytorch.org/) | - | `yolov8n-seg.pt` | ✅ | - |
| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n-seg.torchscript` | ✅ | `imgsz`, `optimize` |
| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n-seg.onnx` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `opset` |
| [OpenVINO](../integrations/openvino.md) | `openvino` | `yolov8n-seg_openvino_model/` | ✅ | `imgsz`, `half`, `int8` |
| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n-seg.engine` | ✅ | `imgsz`, `half`, `dynamic`, `simplify`, `workspace` |
| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n-seg.mlpackage` | ✅ | `imgsz`, `half`, `int8`, `nms` |
| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n-seg_saved_model/` | ✅ | `imgsz`, `keras` |
| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n-seg.pb` | ❌ | `imgsz` |
| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n-seg.tflite` | ✅ | `imgsz`, `half`, `int8` |
| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n-seg_edgetpu.tflite` | ✅ | `imgsz` |
| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n-seg_web_model/` | ✅ | `imgsz`, `half`, `int8` |
| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n-seg_paddle_model/` | ✅ | `imgsz` |
| [NCNN](https://github.com/Tencent/ncnn) | `ncnn` | `yolov8n-seg_ncnn_model/` | ✅ | `imgsz`, `half` |

|

||||

|

||||

See full `export` details in the [Export](https://docs.ultralytics.com/modes/export/) page.
